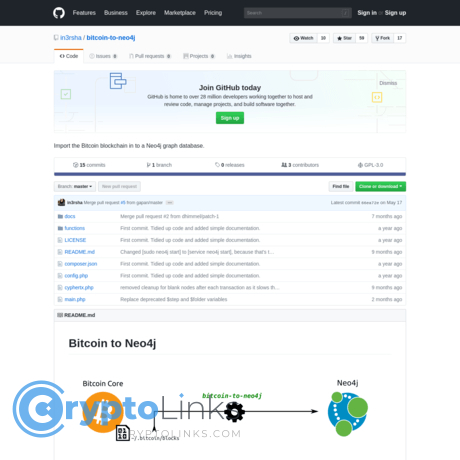

Bitcoin to Neo4j Review Guide: Everything You Need to Know + FAQ

Ever tried to trace a Bitcoin flow across a few hops and felt like you were untangling spaghetti with chopsticks? If you’ve wrestled with CSV exports, SQL joins across UTXO tables, or clunky block explorers, you know how painful it is to ask graph-style questions of blockchain data.

That’s why I’m taking a close look at bitcoin-to-neo4j—a project that promises to turn the Bitcoin ledger into a graph you can explore with Cypher in Neo4j. If you care about following money flows, finding clusters, or running path queries that answer “who touched whom, when, and how often,” this might be exactly what you’ve been missing.

The problems this tool tries to solve

Most teams don’t struggle to get “some” data—they struggle to get the right shape of data. Bitcoin is inherently a graph: transactions create outputs that become inputs to other transactions across time. Yet many people still store it in relational tables that are perfect for accounting totals and terrible for pathfinding.

- Parsing raw blockchain data is slow and messy. Bitcoin Core gives you blocks and transactions, but turning that into a clean, link-rich dataset is a project in itself. One off-by-one in mapping inputs to previous outputs, and your analysis is toast.

- Relational databases don’t love path questions. Multi-hop queries (“show me all addresses within 3 hops of this address between January and March”) become expensive chains of joins. In a graph database, this is the bread and butter.

- Most people just want a usable graph—fast. Building your own ETL from scratch means weeks of work before you can even ask your first question. That’s the gap a purpose-built loader like bitcoin-to-neo4j aims to close.

Real-world pain point: I once tried to track funds that moved through ~17 transactions across a weekend. In SQL, the joins exploded. In Neo4j, a variable-length path with a few constraints made it practical—and, more importantly, repeatable.

This approach isn’t just theory. Graph methods are widely used in blockchain research and investigations. For example, academic work like “A Fistful of Bitcoins” (Meiklejohn et al., 2013) popularized address clustering and flow tracing, and graph tooling has only gotten better since. On the engineering side, Neo4j’s graph model is a common pick for anti-fraud and AML-style link analysis precisely because path queries and centrality measures are first-class citizens.

What I promise you’ll get from this guide

- A plain-English overview of what bitcoin-to-neo4j does and when it shines

- The graph model it builds so you can write smarter Cypher from day one

- Setup steps that cut out guesswork and help you avoid slow re-runs

- Performance tips that matter (and the ones that don’t)

- Common pitfalls and how to steer around them

- A straight answer on whether it fits your use case—or if you should pick an alternative

Who this is for (and who it’s not for)

- Great fit if you:

  - Want to run graph queries (paths, clusters, hubs) over Bitcoin data

  - Care about traceability for research, forensics, or analytics

  - Prefer full control of your data inside your own Neo4j instance

- Probably not ideal if you:

  - Need real-time indexing or streaming alerts out of the box

  - Require multi-chain support today (ETH, LTC, etc.)

  - Want a turnkey hosted SaaS without running any infrastructure

If you’ve been leaning on block explorers and screenshots for investigations, moving to a graph you own is a different world. You can ask: “Within 4 hops of this txid, who are the top receivers in the last 90 days?” or “Which addresses form tight communities around this exchange’s cluster?”—and rerun that analysis anytime.

What we’ll cover at a glance

- Repo basics and the high-level idea behind turning Bitcoin into a graph

- The data model—what nodes and relationships you’ll actually get

- Install and setup, from prerequisites to your first checks in Neo4j Browser

- Starter queries you’ll use on day one (and why they work)

- Performance, hardware, and cost considerations that save time

- FAQ: import time, node requirements, OS support, maintenance, safety

- Alternatives if you need hosted, multi-chain, or ready-made ETL

If a graph-native view of the Bitcoin ledger sounds like the missing piece, you’re in the right place. Ready to see what this tool actually is and why people use it?

What is bitcoin-to-neo4j and why people use it

bitcoin-to-neo4j is a straightforward idea with outsized impact: take the raw Bitcoin blockchain and load it into Neo4j so you can ask graph questions with Cypher—fast. Instead of sifting through block files or bending SQL into knots, you get a clean network of transactions, addresses/outputs, and blocks that you can explore like a map.

“The moment you see the chain as a graph, the story of the money starts telling itself.”

That’s the magic here. You’re not reinventing an ETL pipeline. You’re loading a ready-to-query graph and focusing on the questions that matter: who sent funds, where they went next, and what patterns emerge across time.

The core idea in one line

Turn the Bitcoin ledger into a queryable graph made of nodes (blocks, transactions, addresses/outputs) and relationships (inputs, outputs, containment, and spends). In practical terms, this means you can run Cypher queries like “show me the paths of value from X to Y” instead of writing custom parsers or scraping RPC calls.

Who benefits the most

If you live off patterns and paths, this tool pays for itself in hours saved. I see the biggest wins for:

- Chain analysts who track flows between entities and want quick pathfinding across multiple hops.

- Academics studying transaction graphs, address clustering, and temporal network dynamics. Classic research like “A Fistful of Bitcoins” (Meiklejohn et al., 2013) showed how graph heuristics reveal structure in the Bitcoin economy—this setup lets you replicate and extend that work.

- Compliance teams validating counterparty exposure with time-bounded queries and interaction frequency checks.

- Data scientists who want to test heuristics (e.g., multi-input clustering) or generate features for ML models without building a blockchain parser first.

- Product builders prototyping analytics, alerts, or visual stories with Neo4j Bloom or the Neo4j Browser.

Real example: say you’ve got a suspect address from a public incident report. With the graph loaded, you can trace outbound UTXOs, see fan-out patterns, and check whether funds land on known hubs in just a few queries. No RPC juggling. No CSV wrangling.

What you can do once it’s loaded

This is where it gets fun. With the chain in Neo4j, you can:

- Trace transactions from a source txid or address across multiple hops, optionally within a time window.

- Spot hubs and super-connectors by counting inbound/outbound edges and ranking degree centrality.

- Map relationships between addresses via shared spend patterns or repeated co-appearance in transactions (with the usual heuristic caveats).

- Identify potential clusters using the common multi-input heuristic popularized in academic work—then test how stable those clusters are over time.

- Build visual stories in Neo4j Browser or Bloom, turning complex money trails into shareable, understandable screenshots or walkthroughs.

Example scenarios I’ve run:

- Follow-the-money: start at a known txid, follow N hops, and flag when a path touches an exchange-labeled cluster you maintain in a side label or property.

- Interaction heatmaps: count how often an address interacts with a set of watchlist nodes over rolling 30-day windows.

- Behavioral fingerprints: search for addresses that show the same “fan-out then consolidation” pattern seen in historical cases.

These aren’t pie-in-the-sky ideas; they’re the daily bread of graph analysis. And when the data is modeled as nodes and relationships from the start, Cypher makes them feel natural.

Limitations to keep in mind

It’s powerful, but it’s not a silver bullet. A few realities to plan for:

- Bitcoin-first: This is built for Bitcoin. If you need multi-chain, you’ll likely pair it with other ETL tools or separate graphs.

- Batch import orientation: Expect bulk loading rather than millisecond real-time updates. You can schedule refreshes, but true streaming is another project.

- Hardware and storage: Full-history graphs are big. SSDs and proper Neo4j tuning make a night-and-day difference.

- Maintenance status: Always check the repo’s last commits, open issues, and forks. Pin working versions of Neo4j and dependencies to avoid surprises when you upgrade.

- Heuristic caution: Address clustering is useful but not perfect. As the Meiklejohn paper and follow-up studies note, heuristics can mis-group or miss entities—treat results as leads, not verdicts.

None of these are dealbreakers. They’re just the rules of the game when you run your own analytics stack.

Here’s the kicker: the real power comes from the specific nodes and relationships the importer creates. Get that mental model right and your queries stay fast and accurate. Want the exact map key—blocks, transactions, outputs, and how they link—so you can ask smarter questions from the start?

How it works: data model and relationships

I think of the Bitcoin ledger as a massive conversation. Blocks are the chapters, transactions are the sentences, and outputs are the words that get reused in new sentences. Once you load it into Neo4j, that conversation becomes something you can search, filter, and follow like a detective.

“Patterns are where the truth hides.”

Here’s the mental model I use when I’m writing Cypher and tuning for speed.

Main node types you’ll see

Expect a compact set of labels that map neatly to Bitcoin’s UTXO model. Typical properties are chosen to keep lookups fast and math precise.

- :Block

  - Properties: hash, height, time (epoch), size_bytes, tx_count, prev_hash

  - Why it exists: Fast time/range filters and pathing through chain order

- :Transaction

  - Properties: txid, locktime, version, fee_sats, size_bytes, weight, vsize, coinbase (bool), time

  - Why it exists: Anchor for inputs and outputs; gateway for flow analysis and heuristics

- :Output (UTXO, spent or unspent)

  - Properties: txid, n (vout index), value_sats (integer), script_type (e.g., p2pkh, p2sh, p2wpkh, p2wsh), spent (bool), spent_time

  - Why it exists: The atomic “money” unit; connects past to future when it’s spent

- :Address

  - Properties: address, addr_type (legacy, segwit), valid (bool)

  - Why it exists: Lets you group outputs by receiving address; note that not every script cleanly maps to a classic address

Some importers skip separate input nodes and encode inputs as relationships from previous outputs to the new spending transaction. That keeps the graph lean and queries fast.

Key relationships

You’ll use these edges constantly. Their direction mirrors “how value flows.”

- (b:Block)-[:CONTAINS]->(t:Transaction)

Block membership and ordering context.

- (t:Transaction)-[:CREATED]->(o:Output)

Outputs born from a transaction.

- (o:Output)-[:SPENT_IN]->(t:Transaction)

When an output gets used as an input to a later transaction.

- (o:Output)-[:LOCKED_TO]->(a:Address)

Address association for human-friendly lookups and grouping.

- (b1:Block)-[:NEXT]->(b2:Block) (optional)

Chain traversal without recomputing prev/next every time.

Coinbase transactions show up as Transaction nodes with coinbase = true and the usual CREATED edges, but with no incoming SPENT_IN edges, because they spend no previous outputs. That’s handy when you want to exclude miner rewards from certain analyses.
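To make the direction of those edges concrete, here is a toy sketch in plain Python, with no Neo4j involved. The txids and outpoints are made up, and the two dictionaries only mimic the CREATED and SPENT_IN relationships as described above; a real importer may name or store them differently.

```python
from collections import deque

# Toy data mirroring two relationship types from the model (illustrative only).
# CREATED: transaction -> outputs it creates.
CREATED = {
    "tx1": ["tx1:0", "tx1:1"],
    "tx2": ["tx2:0"],
    "tx3": ["tx3:0"],
}
# SPENT_IN: output -> the transaction that spends it (absent means unspent).
SPENT_IN = {
    "tx1:0": "tx2",
    "tx2:0": "tx3",
}

def trace_outputs(start_output, max_hops):
    """Return {output: hop_count} for outputs reachable within max_hops spends."""
    seen = {start_output: 0}
    queue = deque([start_output])
    while queue:
        out = queue.popleft()
        hops = seen[out]
        spender = SPENT_IN.get(out)
        if spender is None or hops == max_hops:
            continue  # unspent output, or hop budget used up
        for nxt in CREATED.get(spender, []):
            if nxt not in seen:
                seen[nxt] = hops + 1
                queue.append(nxt)
    return seen

print(trace_outputs("tx1:0", 2))
```

One hop here means "output, spending transaction, new output", which is the same unit the Cypher patterns in this guide walk over.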

Indexes and constraints

Indexes make or break performance on large graphs. A sensible baseline looks like this:

- Unique constraints

  - :Block(hash) and :Block(height)

  - :Transaction(txid)

  - Composite on :Output(txid, n)

  - :Address(address)

- Supporting indexes

  - :Transaction(time) for time-bounded scans

  - :Output(spent) for UTXO vs. spent splits

  - :Output(script_type) when you run script-level stats

Constraints prevent accidental duplicates during re-runs or reorg handling, and they keep path queries snappy at mainnet scale. Create the heavy uniqueness constraints first, then bulk load, then add secondary indexes you’ll actually use.
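If you end up creating that baseline by hand, a small generator keeps the statements consistent. This is a sketch that assumes Neo4j 5 constraint syntax and the label and property names listed above; the constraint names and the helper itself are my own invention, not something the repo ships.

```python
def uniqueness_constraint(name, label, props):
    """Build a Neo4j 5-style uniqueness constraint statement (assumed syntax)."""
    if len(props) == 1:
        target = f"n.{props[0]}"
    else:
        # Composite constraints list the properties in parentheses.
        target = "(" + ", ".join(f"n.{p}" for p in props) + ")"
    return (f"CREATE CONSTRAINT {name} IF NOT EXISTS "
            f"FOR (n:{label}) REQUIRE {target} IS UNIQUE")

# Baseline from the list above; the constraint names are hypothetical.
BASELINE = [
    ("block_hash", "Block", ["hash"]),
    ("block_height", "Block", ["height"]),
    ("tx_txid", "Transaction", ["txid"]),
    ("output_outpoint", "Output", ["txid", "n"]),
    ("addr_address", "Address", ["address"]),
]

for spec in BASELINE:
    print(uniqueness_constraint(*spec) + ";")
```

Paste the printed statements into Neo4j Browser, or run them through whatever driver you already use. On Neo4j 4.x the syntax differs, so check the Cypher manual for your version first.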

Where the data comes from

The importer usually reads from a Bitcoin Core node (RPC or raw block files) or a prepared export. It maps:

- Block header fields → :Block properties

- Transaction metadata → :Transaction properties

- vout entries → :Output nodes, plus CREATED edges

- vin references → SPENT_IN edges from old :Output to the new :Transaction

- Script parsing → LOCKED_TO edges where the address can be derived (P2PKH, P2SH, SegWit). Nonstandard scripts are still represented as outputs, just without a clean address.

The UTXO model is preserved: value never teleports—it always travels Output → Transaction → Output. That’s what makes Neo4j such a natural fit.
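The vin/vout mapping is easier to reason about with a concrete transaction in hand. The sketch below assumes the JSON shape Bitcoin Core returns for a decoded transaction (values in BTC, a scriptPubKey object with type and, on recent versions, a single address field). The row layout and function names are hypothetical, and this is not the importer’s actual code.

```python
from decimal import Decimal

def btc_to_sats(value):
    """Convert a BTC amount to integer satoshis without float rounding."""
    return int((Decimal(str(value)) * Decimal(100_000_000)).to_integral_value())

def map_transaction(tx):
    """Turn one decoded transaction into Output rows and SPENT_IN edge rows."""
    outputs, spends = [], []
    for vout in tx["vout"]:
        spk = vout.get("scriptPubKey", {})
        outputs.append({
            "txid": tx["txid"],
            "n": vout["n"],
            "value_sats": btc_to_sats(vout["value"]),
            "script_type": spk.get("type"),
            "address": spk.get("address"),  # None when no address can be derived
        })
    for vin in tx["vin"]:
        if "coinbase" in vin:
            continue  # coinbase inputs reference no previous output
        spends.append({
            "prev_txid": vin["txid"],  # the old output's transaction
            "prev_n": vin["vout"],     # the old output's index
            "spent_in": tx["txid"],    # the new spending transaction
        })
    return outputs, spends
```

Storing satoshis as integers is the design choice that matters here: sums over millions of outputs stay exact, which floats in BTC would not guarantee.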

What this means for your queries

Once the graph is built, you can ask high-signal questions with short, readable patterns. A few practical examples I use all the time:

- “What did this address receive?”

MATCH (a:Address {address: "bc1q..."})<-[:LOCKED_TO]-(o:Output)<-[:CREATED]-(t:Transaction)

RETURN t.txid AS txid, o.value_sats AS sats, t.time

ORDER BY t.time DESC

LIMIT 25;

- “Is there a direct payment path from A to B?” (one-hop through a transaction)

MATCH (a1:Address {address: "addrA"})<-[:LOCKED_TO]-(:Output)-[:SPENT_IN]->(t:Transaction)

MATCH (t)-[:CREATED]->(o2:Output)-[:LOCKED_TO]->(a2:Address {address: "addrB"})

RETURN t.txid AS via_tx, o2.value_sats AS amount_sats, t.time

LIMIT 10;

- “Who tends to co-spend together?” (common-input heuristic)

MATCH (in1:Output)-[:LOCKED_TO]->(a1:Address),

(in2:Output)-[:LOCKED_TO]->(a2:Address),

(in1)-[:SPENT_IN]->(t:Transaction)<-[:SPENT_IN]-(in2)

WHERE a1.address < a2.address // count each pair once, not in both orders

RETURN a1.address AS addr1, a2.address AS addr2, count(DISTINCT t) AS times_together

ORDER BY times_together DESC

LIMIT 20;

Note: This heuristic is well known in the literature (e.g., Meiklejohn et al., 2013) but can be confounded by CoinJoin and privacy tools. Use with care and consider script and value patterns for false-positive reduction.

- “Show flows during a time window.”

MATCH (o:Output)<-[:CREATED]-(t:Transaction)

WHERE t.time >= 1609459200 AND t.time < 1612137600 // Jan 2021

MATCH (o)-[:LOCKED_TO]->(a:Address)

RETURN a.address, sum(o.value_sats) AS total_in_jan_2021

ORDER BY total_in_jan_2021 DESC

LIMIT 50;

Why this works: in a graph, the “who paid whom” story is a path, not a join-jungle. Neo4j turns that path into a few arrows, which keeps your head clear when you’re following funds across many hops or gating analyses by time, script type, or coinbase status.
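Hard-coding epoch bounds like the January 2021 window above gets error-prone fast. Here is a small helper I would pair with those queries to produce $from/$to parameters; it assumes block times are stored as UTC epoch seconds, as in the model described earlier, and the function name is my own.

```python
from datetime import datetime, timezone

def month_window(year, month):
    """Return (start, end) epoch seconds for a calendar month in UTC.

    The window is half-open: start <= t < end.
    """
    start = datetime(year, month, 1, tzinfo=timezone.utc)
    if month == 12:
        end = datetime(year + 1, 1, 1, tzinfo=timezone.utc)
    else:
        end = datetime(year, month + 1, 1, tzinfo=timezone.utc)
    return int(start.timestamp()), int(end.timestamp())

start, end = month_window(2021, 1)
print(start, end)  # 1609459200 1612137600
```

Pass the two values as query parameters instead of pasting literals, and the same Cypher works for any month.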

Research backs this structure. Academic work on Bitcoin transaction graphs shows how UTXO-level modeling enables clustering and flow-based inference at scale—see “A Fistful of Bitcoins” (Meiklejohn et al., 2013) for foundational heuristics and GraphSense for open methodologies. The importer’s graph-first approach is built for those patterns, without you writing ETL from scratch.

There’s a moment during analysis when you watch a single transaction fan out into dozens of outputs and then reconverge a few hops later. It feels less like you’re reading a ledger and more like you’re watching a story unfold. That’s the power of this data model.

Ready to see this model running on your machine and fire off your first queries? In the next section, I’ll show you what you need, how to install it, and the fastest way to check it’s working—want the exact commands and a quick sanity test?

Setup: prerequisites, install, and first queries

If you’ve ever stared at raw blk*.dat files and felt that knot in your stomach, I’ve been there. Spinning up bitcoin-to-neo4j the right way turns that dread into “oh wow, this is actually workable.” Here’s exactly how I set it up, what to check, and a handful of Cypher queries to prove it’s humming.

“Amateurs talk strategy; professionals talk logistics.” — In Bitcoin graph work, setup is your edge.

Prerequisites checklist

- Neo4j installed and running. Community or Enterprise works. Get it from neo4j.com/download. Make sure you can open Neo4j Browser at http://localhost:7474 and connect via bolt://localhost:7687.

- Disk/RAM: SSD strongly recommended. For anything beyond testnet, plan for a lot of disk. Neo4j benefits from a tuned heap and page cache (Neo4j suggests page cache near your hot data size; see official guidance).

- Git to clone the repo: github.com/in3rsha/bitcoin-to-neo4j.

- Runtime dependencies required by the repo (e.g., Python/Go/Node). These are listed in the project README—install exactly what it asks for.

- Bitcoin data source:

- Bitcoin Core full node with server=1, txindex=1, and RPC enabled. See full node guide. Txindex helps the importer fetch any historical transaction quickly.

- or a prepared export the repo supports (some setups can ingest from CSV/JSON or a block dump). Check the README for format expectations.

- Credentials: Neo4j username/password; Bitcoin Core RPC user/pass and host/port.

- Optional: APOC procedures in Neo4j if the importer or your later queries benefit from it (APOC is popular for utilities).

Install and configure in steps

- Clone the repository:

git clone https://github.com/in3rsha/bitcoin-to-neo4j

cd bitcoin-to-neo4j

- Create a local config (often a .env or config file). Typical values look like:

- NEO4J_URI=bolt://localhost:7687

- NEO4J_USER=neo4j

- NEO4J_PASS=your_password

- BITCOIN_RPC_HOST=127.0.0.1

- BITCOIN_RPC_PORT=8332 (18332 for testnet)

- BITCOIN_RPC_USER=rpcuser

- BITCOIN_RPC_PASS=rpcpassword

- NETWORK=mainnet (or testnet/regtest if supported)

Names vary by repo—use the README’s exact keys. If the tool supports reading raw block files instead of RPC, you might set a BITCOIN_DATADIR path instead.
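Before a long run I like to validate settings up front rather than discover a typo three hours in. The sketch below reuses the illustrative key names from the list above (they are not guaranteed to match the repo) and fills in Bitcoin Core’s default RPC port per network. The ImporterConfig class and load_config function are hypothetical.

```python
from dataclasses import dataclass

# Default Bitcoin Core RPC ports per network.
DEFAULT_RPC_PORTS = {"mainnet": 8332, "testnet": 18332, "regtest": 18443}
REQUIRED_KEYS = ("NEO4J_URI", "NEO4J_USER", "NEO4J_PASS",
                 "BITCOIN_RPC_USER", "BITCOIN_RPC_PASS")

@dataclass(frozen=True)
class ImporterConfig:
    neo4j_uri: str
    neo4j_user: str
    neo4j_pass: str
    rpc_url: str
    rpc_user: str
    rpc_pass: str
    network: str

def load_config(env):
    """Validate a mapping of settings (e.g. os.environ) and fill in defaults."""
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    network = env.get("NETWORK", "mainnet")
    if network not in DEFAULT_RPC_PORTS:
        raise ValueError(f"unknown network: {network}")
    host = env.get("BITCOIN_RPC_HOST", "127.0.0.1")
    port = int(env.get("BITCOIN_RPC_PORT", DEFAULT_RPC_PORTS[network]))
    return ImporterConfig(
        neo4j_uri=env["NEO4J_URI"],
        neo4j_user=env["NEO4J_USER"],
        neo4j_pass=env["NEO4J_PASS"],
        rpc_url=f"http://{host}:{port}",
        rpc_user=env["BITCOIN_RPC_USER"],
        rpc_pass=env["BITCOIN_RPC_PASS"],
        network=network,
    )
```

Calling load_config(os.environ) after exporting your .env values fails fast with a list of whatever is missing.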

- Start Neo4j and confirm connection with Neo4j Browser.

- Create basic indexes/constraints before heavy queries (many importers do this for you, but if not, these patterns are common):

CREATE CONSTRAINT tx_txid IF NOT EXISTS FOR (t:Transaction) REQUIRE t.txid IS UNIQUE;

CREATE CONSTRAINT addr_address IF NOT EXISTS FOR (a:Address) REQUIRE a.address IS UNIQUE;

CREATE INDEX out_outpoint IF NOT EXISTS FOR (o:Output) ON (o.txid, o.n);

CREATE INDEX block_hash IF NOT EXISTS FOR (b:Block) ON (b.hash);

These keep duplicates out and make lookups snappy.

Running the import

This is the moment of truth. Expect mainnet to take a long time. Testnet or a height-limited run is perfect for validation.

- Kick it off with the repo’s loader command. You’ll see something like:

./run-import --network=mainnet --from-height=0 --to-height=latest

In some repos it’s a Python/Go script, or a CSV builder followed by neo4j-admin database import (offline bulk mode). Follow the README’s exact command. Bulk import usually requires stopping Neo4j during the import and then restarting once done.

- Monitor logs: watch for connection/auth errors first, then memory messages. If the importer supports checkpoints, you can resume after interruptions without starting from scratch.

- If you see slowness: pause, check that txindex=1 is enabled in Bitcoin Core, your SSD isn’t throttling, and your indexes/constraints aren’t being created repeatedly during import.
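If the importer you run has no checkpointing of its own, a height-range wrapper is the simplest way to get resumability. This is a generic sketch, not the repo’s mechanism: the checkpoint file format and function names are assumptions, and the actual import call for each range is left to you.

```python
import json
import os

def plan_batches(start_height, tip_height, batch_size):
    """Yield inclusive (from, to) block-height ranges covering start..tip."""
    height = start_height
    while height <= tip_height:
        end = min(height + batch_size - 1, tip_height)
        yield height, end
        height = end + 1

def load_checkpoint(path):
    """Return the next height to import, or 0 when no checkpoint exists."""
    if not os.path.exists(path):
        return 0
    with open(path) as fh:
        return json.load(fh)["next_height"]

def save_checkpoint(path, next_height):
    """Write via a temp file so a crash never leaves a half-written checkpoint."""
    tmp = path + ".tmp"
    with open(tmp, "w") as fh:
        json.dump({"next_height": next_height}, fh)
    os.replace(tmp, path)
```

The loop then reads: load the checkpoint, iterate plan_batches from that height, import each range, and call save_checkpoint(path, end + 1) only after the range has committed.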

Verifying in Neo4j Browser

Once you’ve ingested a slice, hop into Neo4j Browser and sanity-check the graph.

- Total node and relationship counts (a quick gut check):

MATCH (n) RETURN count(n) AS nodes;

MATCH ()-[r]->() RETURN count(r) AS relationships;

- See labels and relationship types:

CALL db.labels();

CALL db.relationshipTypes();

- Check indexes and constraints:

SHOW INDEXES; SHOW CONSTRAINTS;

- Validate a known txid and neighbors (paste one from a block explorer to be sure it matches):

MATCH (t:Transaction {txid: $txid})-[:CREATED]->(o:Output) RETURN t, o LIMIT 25;

MATCH (t:Transaction {txid: $txid})<-[:SPENT_IN]-(o:Output) RETURN o LIMIT 25;
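Once those two queries return rows, one arithmetic check catches most mapping mistakes: for any non-coinbase transaction, the inputs must sum to at least the outputs, and the difference is the fee. The helper below is a sketch that takes plain lists of satoshi values you have pulled from the graph; the function name and return shape are my own.

```python
def check_transaction(input_sats, output_sats, coinbase=False):
    """Sanity-check one transaction's value flow.

    input_sats / output_sats: lists of integer satoshi values taken from
    the spent outputs and the created outputs of the same transaction.
    """
    if coinbase:
        # A coinbase transaction spends no previous outputs, so no fee applies.
        return {"ok": len(input_sats) == 0, "fee_sats": None}
    fee = sum(input_sats) - sum(output_sats)
    # A negative fee means an input edge is missing or points at the wrong output.
    return {"ok": fee >= 0, "fee_sats": fee}

print(check_transaction([50_000, 30_000], [70_000]))
```

Compare fee_sats with what a block explorer shows for the same txid. A mismatch usually points at an off-by-one in the vout index.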

Starter Cypher queries

Data model names differ slightly across importers. Adjust label/property names to match what the repo creates. These patterns are what I usually start with:

- Find a transaction by txid

MATCH (t:Transaction {txid: $txid})

OPTIONAL MATCH (t)-[:CREATED]->(o:Output)

OPTIONAL MATCH (t)<-[:SPENT_IN]-(i:Output)

RETURN t, collect(DISTINCT i) AS inputs, collect(DISTINCT o) AS outputs;

- List outputs to an address

MATCH (a:Address {address: $address})<-[:LOCKED_TO]-(o:Output)<-[:CREATED]-(t:Transaction)

RETURN t.txid AS txid, o.value_sats AS value_sats, o.n AS vout

ORDER BY value_sats DESC LIMIT 50;

- Follow N hops from an address (spend chain up to 3 hops)

MATCH (a:Address {address: $address})<-[:LOCKED_TO]-(o1:Output)

MATCH p = (o1)-[:SPENT_IN]->(t1:Transaction)-[:CREATED]->(o2:Output)

OPTIONAL MATCH p2 = (o2)-[:SPENT_IN|CREATED*0..4]->(:Output) // each extra hop is two relationships: SPENT_IN, then CREATED

RETURN p, p2 LIMIT 25;

- Aggregate inflows by month (requires block time on transactions or blocks)

MATCH (a:Address {address: $address})<-[:LOCKED_TO]-(o:Output)<-[:CREATED]-(t:Transaction)<-[:CONTAINS]-(b:Block)

WITH date(datetime({epochSeconds: b.time})) AS d, o.value_sats AS v

RETURN d.year AS year, d.month AS month, sum(v) AS sats_in

ORDER BY year, month;

- Spot large hubs (lots of outputs sent to the same address)

MATCH (a:Address)<-[:LOCKED_TO]-(o:Output)

WITH a, count(o) AS rc

WHERE rc > 1000

RETURN a.address AS address, rc AS outputs_received

ORDER BY outputs_received DESC LIMIT 20;

If your importer uses [:INPUT] and [:OUTPUT] relationships instead, the logic’s the same—connect prior outputs to new transactions, then link new outputs to addresses.
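Because relationship names vary, I find it handy to generate the multi-hop pattern instead of hand-editing it. The sketch below builds a bounded spend-chain query in which one hop means two relationships (output to spending transaction, then transaction to new output). The default names follow the data model section earlier in this guide and are assumptions about your schema, so pass your importer’s names if they differ.

```python
def spend_chain_query(max_hops, spent_rel="SPENT_IN",
                      created_rel="CREATED", locked_rel="LOCKED_TO"):
    """Build a Cypher query following up to max_hops spends from an address."""
    if max_hops < 1:
        raise ValueError("max_hops must be at least 1")
    max_rels = 2 * max_hops  # two relationships per hop
    return (
        f"MATCH (a:Address {{address: $address}})<-[:{locked_rel}]-(start:Output)\n"
        f"MATCH p = (start)-[:{spent_rel}|{created_rel}*2..{max_rels}]->(end:Output)\n"
        "RETURN p LIMIT 25"
    )

print(spend_chain_query(3))
```

Ending the pattern on an :Output node keeps only whole hops, and the explicit upper bound keeps the traversal from exploding on busy addresses.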

Optional: testnet/regtest

I like proving the pipeline on smaller networks first—it’s faster, safer, and helps you tune settings before mainnet.

- Testnet: run Bitcoin Core with -testnet -txindex=1, adjust RPC port (18332), and set NETWORK=testnet in the importer config. You’ll pull a fraction of mainnet’s data, ideal for dry runs.

- Regtest: fully local lab. Start with -regtest -txindex=1. Generate blocks and transactions:

bitcoin-cli -regtest createwallet mylab

bitcoin-cli -regtest -generate 101

bitcoin-cli -regtest sendtoaddress "$(bitcoin-cli -regtest getnewaddress)" 1.0

Set NETWORK=regtest and import. You control every block, which is perfect for repeatable tests.

Quick reality check: how much RAM should you assign to Neo4j’s page cache, when should you build indexes, and what actually speeds up the import the most? Want the short version or the nerd knobs I use when I need results fast?

Performance, costs, and tuning tips

Speed is a feature. When your Bitcoin graph crawls, your insights die. I’ve learned that most frustration with bitcoin-to-neo4j isn’t about “does it work?”—it’s about time, storage, and tuning. Here’s how I plan, what I actually use, and the little tweaks that keep imports and queries snappy.

“Fast data is kind to your curiosity.”

Hardware and storage expectations

If you’re loading the full Bitcoin history into Neo4j, plan like a realist. The graph footprint depends on the exact schema (how many node types and relationships you materialize), but you’re firmly in “serious storage” territory.

- Disks: NVMe SSDs aren’t optional; they’re the difference between hours and days. Keep at least 25–30% free space for compaction and transaction logs.

- RAM: 32 GB is the minimum I’m comfortable with; 64–128 GB makes imports and page cache much happier.

- CPU: 8+ modern cores help with batch ingest and concurrent queries. Imports are I/O-bound, but CPU still matters.

- Size planning: a full Bitcoin graph can span hundreds of gigabytes, and some models can push into low TBs. It varies with how many outputs/addresses you materialize and property payloads. Keep headroom.

- Pruned vs. full source: If the importer can work from a pruned node or prepared export, you’ll save disk on the Bitcoin side. The Neo4j store size is independent of your node being pruned—what matters is what you load.

For context, Neo4j’s own bulk importer can load billions of nodes/relationships on beefy hardware, as documented in Neo4j’s import guide. If the repo supports generating CSV for the offline importer, use it—it’s the fastest route. If it writes via the Bolt/HTTP driver, you’ll need extra patience and good batch settings.

Speeding up imports

Here’s the playbook that consistently shortens wall-clock time for me:

- Right-size memory: Set heap for planning (8–16 GB is plenty) and push the page cache high (50–70% of RAM). Typical split on a 64 GB box: heap 12–16 GB, page cache 32–40 GB.

- Disable expensive extras while loading:

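- Turn off query logging (db.logs.query.enabled=OFF on Neo4j 5, dbms.logs.query.enabled=OFF on 4.x) and lower log verbosity.

- For offline bulk imports, build indexes and constraints after the load. For driver-based loads that MERGE on a key, keep the uniqueness constraint on that key (MERGE without it has to scan the label) and defer only the secondary indexes, then let Neo4j backfill them.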

- Batch sizes: Follow the repo defaults. If you can tune them, I usually land around 5k–20k per transaction batch for driver-based loaders. If you see timeouts or memory spikes, halve it.

- Commit timing: Shorter commits keep memory flatter; longer commits maximize throughput. Aim for commits that finish in 1–5 seconds under load.

- Fast filesystem and power: Use XFS or ext4 on Linux, disable disk encryption on the data volume, set vm.swappiness=1, and increase file descriptors (e.g., ulimit -n 1000000).

- Separate volumes: If possible, place transaction logs and database store on separate NVMe volumes to reduce write contention.

- Offline CSV import (if supported): Build CSV, then run the neo4j-admin database import tool. This routinely outperforms driver-based ingestion by an order of magnitude.

Monitoring matters. If your load rate collapses mid-import, it’s usually the page cache thrashing or GC churn. Watch disk utilization (iostat), CPU steal time (cloud), and GC pauses. If GC is busy, lower the batch size first; if pauses persist, revisit heap sizing rather than simply adding more. If disk is pegged at 100%, you’re I/O-bound, and no config will outrun a slow SSD.
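The memory split from the playbook is simple arithmetic, so I script it to avoid over-committing a box. The sketch below prints neo4j.conf lines using Neo4j 5 setting names (on 4.x the same settings start with dbms.memory instead of server.memory). The default fractions and the OS reserve are my own assumptions, not official guidance.

```python
def memory_split(total_ram_gb, heap_gb=12, pagecache_fraction=0.55, os_reserve_gb=4):
    """Suggest heap and page cache settings for a dedicated Neo4j host."""
    pagecache_gb = int(total_ram_gb * pagecache_fraction)
    if heap_gb + pagecache_gb + os_reserve_gb > total_ram_gb:
        raise ValueError("heap + page cache + OS reserve exceed available RAM")
    return [
        # Equal initial and max heap avoids resizing pauses during the load.
        f"server.memory.heap.initial_size={heap_gb}g",
        f"server.memory.heap.max_size={heap_gb}g",
        f"server.memory.pagecache.size={pagecache_gb}g",
    ]

for line in memory_split(64):
    print(line)
```

On a 64 GB machine this yields a 12 GB heap and a 35 GB page cache, in line with the typical split mentioned above.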

Query performance once loaded

Once the graph is live, the real fun begins—as long as your queries are smart. Bitcoin graphs are dense in bursts and sparse elsewhere. Lean into that.

- Create targeted indexes and constraints:

- :Transaction(txid), :Block(height) or :Block(hash), :Address(address) are the usual suspects.

- Unique constraints prevent duplicates and speed up point lookups.

- Bound your searches in time and hops:

- Add WHERE tx.time >= $from AND tx.time < $to filters so Cypher can prune early.

- Avoid variable-length paths without an upper bound. Set something like [*..6] and justify it.

- Precompute the heavy stuff:

- Materialize daily inflow/outflow per address into summary nodes for reporting.

- Store “cluster” identifiers if you’re applying heuristics, so you don’t recompute on every query.

- Use the right patterns:

- Shortest path is great—but on Bitcoin graphs, always bound it by hops and time.

- Replace big OPTIONAL MATCH blocks with pattern comprehensions or CALL subqueries, and use coalesce() to supply defaults for missing values.

- Warm the cache: Hit common indexes at startup (addresses you always query). It’s low-effort, high-return.

If you plan to run algorithms (PageRank, WCC, community detection), the Graph Data Science library and in-memory graph projections are game-changers. Project only the subgraph you need (e.g., last N blocks or specific address ranges) and you’ll get interactive results instead of overnight runs.
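The “precompute the heavy stuff” advice is easy to prototype outside the database before you commit to summary nodes. Here is a sketch that rolls up (address, block time, value) rows, such as the result of an inflow query, into per-day totals. The row shape and function name are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timezone

def daily_inflows(rows):
    """Aggregate (address, epoch_seconds, value_sats) rows per address and UTC day."""
    totals = defaultdict(int)
    for address, epoch, sats in rows:
        day = datetime.fromtimestamp(epoch, tz=timezone.utc).date().isoformat()
        totals[(address, day)] += sats
    return dict(totals)

rows = [
    ("addrA", 1609459200, 100),   # 2021-01-01 00:00:00 UTC
    ("addrA", 1609462800, 50),    # one hour later, same day
    ("addrA", 1609545600, 7),     # next day
]
print(daily_inflows(rows))
```

Each resulting (address, day) pair maps naturally onto one summary node, so reporting queries read a handful of nodes instead of re-walking every output.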

Troubleshooting common issues

- Auth/connection errors: Double-check the Bolt URI (bolt://localhost:7687 by default), credentials, and whether encryption is required. On Neo4j 5, ensure driver versions match the server.

- Out-of-memory or GC storms: Shrink batch size, raise page cache, keep heap moderate (too-big heaps can make GC worse). If the OS starts swapping, you’re done—reduce memory pressure.

- Duplicate nodes or relationships: You’re probably missing a unique constraint or you’re reprocessing overlapping batches. Create uniqueness constraints before a driver-based load; on a graph that already holds duplicates, the constraint can’t be created until you remove them. If the importer supports MERGE vs CREATE modes, pick the right one. Watch case sensitivity on addresses and ensure you don’t mix txid with wtxid.

- Mismatched versions: Neo4j 4 vs 5, APOC “core” vs “full,” driver versions—it all matters. Pin versions. If the repo is older, match it to the Neo4j it was built for.

- Transaction timeouts: Increase the server transaction timeout for imports, or reduce batch duration. Timeouts that hit at regular intervals usually mean disk stalls.

- File descriptor or “too many open files” errors: Raise ulimit -n and ensure your service unit (systemd) also has higher limits.

When in doubt, PROFILE your slow queries in Neo4j Browser to see where the planner is exploding. If the plan shows big “Expand(All)” steps across massive subgraphs, it’s your hint to add a filter, index, or a hop limit.

Safety and privacy notes

- Don’t expose Neo4j to the open internet: Lock down Bolt and HTTP ports to your VPN or VPC. Use strong passwords and rotate them.

- Enable TLS if you connect remotely. A lot of people skip this in the lab and forget it in production.

- Protect your Bitcoin RPC creds: Never hardcode them in scripts that could leak to logs or CI. Mask them in any shared screenshots.

- Be mindful with results: Clustering can imply identity. Share responsibly—graph screenshots can carry more metadata than you think.

If you’re cost-conscious, here’s a quick sanity check: local NVMe boxes will beat most cloud disks on price/performance, but managed clouds give you convenience. On AWS, instances with fast NVMe (e.g., i4i family) are solid picks; on-prem, a single workstation with 64–128 GB RAM and a good NVMe RAID can carry a serious Bitcoin graph. If you prefer published benchmarks, Neo4j’s import docs are a good baseline, and research like BlockSci and the GraphSense project give useful context on data volume and analysis patterns (even if their tech stack differs).

Curious about exact install time and disk numbers for your setup—or whether you really need a full node vs. a pruned node or export? I’ll answer that next, along with OS support and maintenance realities. Got a guess for how many hours a mainnet load will take on your hardware?

FAQ and what people usually ask

Do I need a full Bitcoin node? Can I use pruned or an export?

A full Bitcoin Core node is the safest bet if you want a complete, queryable graph. You’ll need access to all historical blocks to link inputs to their originating outputs; that’s impossible with a heavily pruned node.

- Full node (recommended): You get all blocks (blk*.dat) and chainstate, which lets the importer build end-to-end relationships without gaps.

- Pruned node: Fine only if your import scope fits within the prune window. Once old blocks are discarded, you can’t resolve historical inputs. For full-history graphs, pruned won’t cut it.

- Prepared exports/snapshots: Some teams feed the importer with a pre-extracted block/tx dataset (or a shared blocks folder) to skip the full sync step. This can work well, but format compatibility matters—always check the repo’s README for supported inputs and versions.

Quick check on your node: bitcoin-cli getblockchaininfo and look for "pruned": false. If it’s true and you need complete history, stop now and reconfigure.
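If you want that check in a preflight script instead of eyeballing JSON, the sketch below parses the getblockchaininfo output. It relies on the pruned field and on pruneheight, which Bitcoin Core reports only when pruning is enabled; the function name and messages are my own.

```python
import json

def history_status(getblockchaininfo_json):
    """Summarize whether a node still holds full block history."""
    info = json.loads(getblockchaininfo_json)
    if not info.get("pruned", False):
        return "full history available"
    # pruneheight is the lowest block height the node still stores.
    height = info.get("pruneheight", "unknown")
    return f"pruned: blocks below height {height} are unavailable"

print(history_status('{"chain": "main", "pruned": false}'))
```

Feed it the output of bitcoin-cli getblockchaininfo (for example via subprocess) and abort the import early when the answer isn’t what your scope needs.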

Pro tip: If your goal is prototyping queries or building UI/heuristics, validate everything on testnet first, then go mainnet once your Cypher patterns and indexes look solid.

How long does the import take and how big is it?

It varies a lot by hardware, Neo4j tuning, and exactly what you load (indexes, constraints, labels). I use the following as realistic planning numbers based on community reports and my own tests on smaller slices:

- Time: NVMe SSD + 8–16 cores + 32–64 GB RAM: ~24–72 hours for a full-history import. On SATA HDDs, expect several days.

- Size (Neo4j store): Expect hundreds of gigabytes. With all nodes, relationships, and helpful indexes, plan in the 600 GB to 1.5 TB range. Your model and indexing choices are the swing factor.

- Free headroom: Keep at least 20–30% extra disk free for checkpoints, temporary files, and future growth.
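To turn those planning numbers into a disk size, here is a small back-of-the-envelope sketch. It assumes the 20–30% headroom means "that share of the whole disk stays free", which is one reasonable reading; the function name is mine.

```python
def required_disk_gb(store_gb: float, free_fraction: float) -> float:
    """Disk size needed so that `free_fraction` of the disk stays free.

    Assumption: headroom is a fraction of total disk, not of the store.
    """
    if not 0 <= free_fraction < 1:
        raise ValueError("free_fraction must be in [0, 1)")
    return store_gb / (1 - free_fraction)


# The store sizes and headroom values are the planning numbers from the list above.
for store_gb in (600, 1500):
    for free in (0.20, 0.30):
        disk = required_disk_gb(store_gb, free)
        print(f"{store_gb} GB store, {free:.0%} free -> {disk:.0f} GB disk")
```

Under that reading, the range works out to roughly 750 GB at the low end and a bit over 2.1 TB at the high end, for the Neo4j store alone. The Bitcoin Core data directory needs its own space on top of that.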

Academic and industry experience backs up why these graphs get chunky: the Bitcoin ledger is a dense, many-to-many transaction network. Studies like Meiklejohn et al. (2013) and the BlockSci paper (Kalodner et al., 2017) consistently show that graph-style analyses over Bitcoin grow complex quickly and benefit from fast disks and plenty of memory.

Pro tip: Create heavy indexes after bulk import, not before. It consistently saves hours.
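As a rough illustration of "indexes after import", the sketch below just generates the Cypher statements you would run once the bulk load has finished. The labels (Tx, Address, Block) and property names are assumptions on my part, so check them against the schema your import actually produces; the CREATE INDEX ... IF NOT EXISTS form is the syntax used by recent Neo4j versions (4.x and later).

```python
# Hypothetical (label, property) pairs; verify against your real schema.
LOOKUPS = [("Tx", "txid"), ("Address", "address"), ("Block", "height")]


def index_statements(lookups):
    """Build one CREATE INDEX statement per (label, property) pair."""
    return [
        f"CREATE INDEX {label.lower()}_{prop} IF NOT EXISTS "
        f"FOR (n:{label}) ON (n.{prop})"
        for label, prop in lookups
    ]


# Print the statements so they can be reviewed before running them.
for statement in index_statements(LOOKUPS):
    print(statement + ";")
```

Keeping the list in one place makes it easy to review which indexes exist and to rerun the same statements safely after a fresh import.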

Does it work on Windows/macOS/Linux?

Yes—if you meet the dependencies. That said, Linux tends to be the smoothest for long imports, monitoring, and headless runs.

- Linux: Best stability for long jobs. Remember to raise file descriptor limits (e.g., ulimit -n 65535) and tune Neo4j heap/pagecache.

- macOS: Great for development and smaller imports. For large runs, keep an eye on I/O and swap.

- Windows: It can work, but for heavy workloads I usually recommend WSL2 with the data kept on WSL2’s own Linux filesystem on a fast disk (not on a mounted Windows drive), or just run a Linux VM/bare metal.
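For the file descriptor limit mentioned in the Linux bullet, a quick sanity check from Python might look like the sketch below. It uses the standard resource module (Unix only) and the 65535 figure from the ulimit example; the helper name is hypothetical.

```python
import resource  # Unix-only module; not available on native Windows

TARGET_NOFILE = 65535  # the value from the ulimit example above


def nofile_is_sufficient(soft_limit: int, target: int = TARGET_NOFILE) -> bool:
    """True if the soft open-files limit meets the target."""
    # RLIM_INFINITY means "no limit", which always satisfies the target.
    return soft_limit == resource.RLIM_INFINITY or soft_limit >= target


# Read the limits that apply to this Python process.
soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
print(f"soft={soft} hard={hard} ok={nofile_is_sufficient(soft)}")
```

This reports the limit of the process that runs the script, which it inherits from your shell. If Neo4j runs as a service, its limit may be configured separately, so treat this as a first check and not the final word.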

Is this maintained? What if the repo is stale?

Always check the repo’s pulse: last commits, open issues, PR activity, and forks. If it looks quiet, you still have options:

- Pin versions: Lock to a known-good commit SHA and a compatible Neo4j version. It’s boring, but reliable.

- Use a fork: Sometimes the most active fork fixes minor breakages or adds small features (e.g., updated Neo4j driver versions).

- Minimal patching: If dependency versions drift, small tweaks to drivers or import scripts often bring it back to life.

For production, I document the exact commit, Neo4j minor version, and JVM settings in the repo so future teammates don’t have to rediscover them.

Can I use this for real-time monitoring or other chains?

It’s built for batch graph building on Bitcoin, not real-time streaming. You can bolt on a custom pipeline (e.g., Bitcoin Core ZMQ → Kafka → microservice → Neo4j) to ingest new blocks as they land, but that’s an engineering project. For other chains, this tool is not the right fit out of the box.

Reality check: Real-time alerting over variable-length graph paths is hard. If “live” is non-negotiable, consider a dual setup: a smaller, fresh-tip cache for the last N blocks and a larger, periodically refreshed historical graph.
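To make the "fresh-tip cache for the last N blocks" idea concrete, here is a toy in-memory sketch. The class and method names are hypothetical, it ignores chain reorganizations, and a real setup would sit behind whatever block feed you build.

```python
from collections import deque


class TipCache:
    """Keep only the most recent `max_blocks` blocks in memory."""

    def __init__(self, max_blocks: int):
        # A bounded deque drops the oldest entry automatically when full.
        self._blocks = deque(maxlen=max_blocks)

    def add_block(self, height: int, txids: list[str]) -> None:
        self._blocks.append((height, txids))

    def heights(self) -> list[int]:
        return [height for height, _ in self._blocks]

    def contains_tx(self, txid: str) -> bool:
        return any(txid in txids for _, txids in self._blocks)


cache = TipCache(max_blocks=3)
for height in range(100, 105):
    cache.add_block(height, [f"tx-{height}"])  # made-up txids
print(cache.heights())  # [102, 103, 104]
```

Lookups that miss the tip cache would then fall through to the larger historical graph, which only needs refreshing on your batch schedule.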

What are the alternatives or complements?

If you need hosting, multi-chain, or prebuilt ETL, here are the usual suspects people compare:

- GraphSense: Open-source, focuses on crypto analytics with a robust ETL, commonly used in research and public sector environments.

- BlockSci: Fast analysis library (C++/Python) with its own data structures; great for scriptable research workflows.

- Bitquery: Powerful APIs and GraphQL for many chains; good if you prefer API access over managing your own database.

- Dune: Hosted analytics with SQL; quicker time-to-insight but typically better for EVM-style chains than Bitcoin UTXO graphs.

- Commercial graph datasets: If you need enriched entities, clustering heuristics, and compliance-grade data, managed providers can save months.

Plenty of teams use bitcoin-to-neo4j as their private “ground truth,” then layer an API or dashboard on top. Others prototype in Neo4j, then switch to a hosted provider when stakeholders want always-on SLAs.

Helpful links you’ll want handy

- bitcoin-to-neo4j GitHub repo

- Neo4j Docs (operations, Cypher, performance)

- Bitcoin Core Docs (RPC, config, ZMQ)

One last thing before we move on: Want a clear yes/no on whether bitcoin-to-neo4j is the right call for your stack—and the exact next steps I recommend if it is? Keep reading. I’ll make the call and give you a simple action plan.

Should you use bitcoin-to-neo4j? My verdict

Short answer: if you want full control of a Bitcoin graph inside your own Neo4j, are fine with a batch import, and you live in Cypher, this is absolutely worth your time. If you want a hosted dashboard tomorrow, real-time indexing, or multi-chain out of the box, this won’t match your expectations.

Who will love it vs. who should skip

- You’ll love it if you ask graph-first questions like:

  - “What paths exist within 3–5 hops from this address to a known exchange deposit?”

  - “Which clusters see the largest 24-hour inflow after a market-moving event?”

  - “Where did funds flow after a malware payout or darknet market withdrawal?”

I’ve seen teams use this setup to replicate classic address-clustering heuristics (multi-input, change output) popularized by academic work like A Fistful of Bitcoins, and then validate or refine them with their own rules. Graph-native traversals make these heuristics far easier to implement and audit compared to row-by-row SQL.

- You might skip it if you need:

  - Managed infrastructure with instant dashboards and no imports

  - Real-time indexing or alerting seconds after a block lands

  - Multi-chain ETL without building separate pipelines

In those cases, you’re likely better served by a managed data platform or a service that already streams and enriches blockchain events.

Where this shines is transparency and experimentation. You can see every node, every relationship, and every assumption. That matters when you’re doing sensitive research, compliance investigations, or publishing studies. For example, if you’re evaluating risky flows, you can encode and compare multiple heuristics side-by-side and document exactly why a path was marked suspicious. This kind of explainability is a big deal in AML contexts and aligns with approaches used in research like the Elliptic dataset paper, where graph features are central to classification quality.

Two real-world patterns where a Neo4j-backed Bitcoin graph consistently delivers:

- Ransomware fund tracing. Start from a known ransom address, search for short paths to large services, and quantify volumes over time. Path constraints and time windows help avoid noisy, long trails.

- Cluster hygiene checks. Use the multi-input heuristic to propose clusters, then test robustness by excluding known CoinJoin patterns to curb false positives. Studies have long noted that CoinJoin breaks simple clustering; having the graph lets you encode exceptions cleanly. For background, see the CoinJoin entry on the Bitcoin Wiki.
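The cluster hygiene pattern can be prototyped outside the database before you commit to a Cypher version. Below is a minimal sketch of the multi-input heuristic using union-find, with an optional set of transaction IDs to exclude (for example, ones you have flagged as likely CoinJoins). The data shape and all names are assumptions for illustration, and how you flag CoinJoins is left to you.

```python
def cluster_addresses(transactions, skip_txids=frozenset()):
    """Group addresses with the multi-input heuristic using union-find.

    `transactions` maps txid -> list of input addresses.
    `skip_txids` holds txids whose inputs must not be merged.
    """
    parent = {}

    def find(addr):
        parent.setdefault(addr, addr)
        while parent[addr] != addr:
            parent[addr] = parent[parent[addr]]  # path halving
            addr = parent[addr]
        return addr

    for txid, inputs in transactions.items():
        if not inputs:
            continue
        if txid in skip_txids:
            # Register the addresses, but do not merge them.
            for addr in inputs:
                find(addr)
            continue
        root = find(inputs[0])
        for addr in inputs[1:]:
            parent[find(addr)] = root

    clusters = {}
    for addr in parent:
        clusters.setdefault(find(addr), set()).add(addr)
    return sorted(sorted(members) for members in clusters.values())


# Made-up toy data: "cj" stands in for a suspected CoinJoin.
txs = {"t1": ["A", "B"], "t2": ["B", "C"], "cj": ["C", "X", "Y"]}
print(cluster_addresses(txs))                     # [['A', 'B', 'C', 'X', 'Y']]
print(cluster_addresses(txs, skip_txids={"cj"}))  # [['A', 'B', 'C'], ['X'], ['Y']]
```

Comparing the two outputs shows the false positive directly: one flagged transaction is enough to fuse otherwise unrelated addresses into a single cluster.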

“All models are wrong, but some are useful,” as the statistician George Box put it. With blockchain graphs, the usefulness comes from treating assumptions as first-class citizens you can query and revise.

Your next steps

- Pressure-test on a small network. Spin it up on testnet or a narrow mainnet slice. Confirm node labels, relationships, and indexes behave as you expect.

- Pick one question that proves value. For example: “Show all paths ≤4 hops from Address X to any address tagged as a service or exchange.” Measure runtime and memory.

- Lock in modeling choices early. Decide how you want to represent addresses vs. outputs, change heuristics, and any tags you’ll attach. Consistency now saves rework later.

- Tune memory and indexes. Set Neo4j heap and page cache to match your hardware, and only add indexes that help your common lookups (txid, address, block height).

- Budget for mainnet. Plan SSD storage and RAM based on your target history. Expect a long import; run it during off-hours and monitor logs closely.

- Plan your refresh cadence. If you’re okay with batch updates, schedule regular imports for new blocks rather than chasing real-time.

- Harden and document. Restrict access, avoid exposing Neo4j to the internet, write down your index strategy, and keep a restore-tested backup routine.
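For the "one question that proves value" step, it helps to know what answer you expect before you time the real query. The sketch below enumerates all simple paths of at most N hops from a start address to any tagged target over a tiny in-memory graph. The graph shape and names are made up for illustration; in Neo4j you would express the same idea with a variable-length pattern such as [*..4] against whatever schema your import produced.

```python
from collections import deque


def paths_within(graph, start, targets, max_hops):
    """Return all simple paths from `start` to any node in `targets`
    that use at most `max_hops` edges. `graph` maps node -> neighbours."""
    found = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in targets and len(path) > 1:
            found.append(path)
            continue  # stop extending once a target is reached
        if len(path) - 1 == max_hops:
            continue  # hop budget used up
        for nxt in graph.get(node, ()):
            if nxt not in path:  # keep paths simple (no revisits)
                queue.append(path + [nxt])
    return found


# Hypothetical toy graph: "exch" and "svc" play the tagged services.
graph = {"X": ["a", "b"], "a": ["exch"], "b": ["c"],
         "c": ["d"], "d": ["e"], "e": ["svc"]}
print(paths_within(graph, "X", {"exch", "svc"}, max_hops=4))
# [['X', 'a', 'exch']]
```

The five-hop route to "svc" is dropped by the hop cap, which is exactly the behaviour you want to confirm on a small slice before running the equivalent query on mainnet data.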

If you’re ready to experiment, grab the bitcoin-to-neo4j repo on GitHub, and keep the Neo4j docs handy while you iterate.

Conclusion

Turning Bitcoin into a graph makes tough questions feel tractable. You can follow the money, cap path lengths, wrap results in time windows, and attach context as properties you trust—not someone else’s black box. That’s the difference between guessing and understanding.

If you need a hosted, real-time, multi-chain feed, this isn’t it. But if you want a sturdy, transparent, “I-can-explain-every-edge” Bitcoin graph that you control, this tool gets you there—and it does it in a way that aligns with how serious research and investigations already work.

My verdict: highly recommended for analysts, researchers, and builders who think in graphs and want their own Bitcoin truth in Neo4j. Start small, prove the query that matters, then scale with confidence.