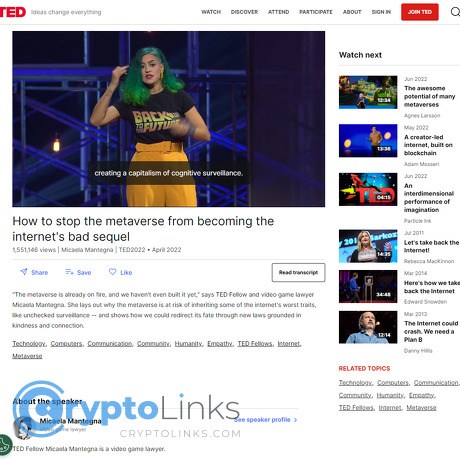

Micaela Mantegna: How to stop the metaverse from becoming the internet's bad sequel Review

www.ted.com

Micaela Mantegna’s Plan to Stop the Metaverse From Becoming the Internet’s Bad Sequel — A No-BS Review Guide + FAQ

What if the metaverse repeats the same mistakes that broke trust in Web2—only this time with headsets, biometrics, and our kids involved? That’s the gut-check I had after watching Micaela Mantegna’s TED talk. If you care about crypto, creators, gaming, or where your identity and assets live online, this isn’t hype. It’s a warning—and a roadmap.

The problem

We’re not just building new worlds. We’re stacking new risks on top of old ones. Here’s what’s already showing up across XR, gaming, and “Web2.5” platforms:

- Walled gardens and lock-in: Avatars, skins, and digital goods are locked to a single platform. Picture buying a legendary skin in one world and discovering it’s worthless anywhere else. The Epic v. Apple fight put this front and center: closed ecosystems and high fees shape who gets paid and who gets blocked.

- Surveillance-by-default: XR gear can capture gaze, gesture, body motion, and even inferred emotion. Research from Stanford’s Virtual Human Interaction Lab shows motion data alone can uniquely identify users with high accuracy, turning your movements into a fingerprint (Stanford VHIL). That’s not “just telemetry.”

- Shady monetization and dark patterns: The FTC’s record $520M action against Epic Games highlighted coercive UX and privacy failures aimed at kids (FTC settlement). If that’s happening on today’s screens, imagine it in fully immersive spaces.

- Deepfakes and identity hijacking: Synthetic media is exploding across social and messaging apps. Enterprise fraud data shows a massive year-over-year spike in deepfake attempts (Sumsub 2024 Identity Fraud Report). In voice- and avatar-led worlds, that shifts from annoying to dangerous fast.

- Harassment and weak safety: Early metaverse platforms already show frequent harassment. One watchdog documented incidents occurring every few minutes in social VR spaces (CCDH report). Safety can’t be patched in later.

- Crypto stuck at the door: “Ownership” means little if assets and identity can’t travel. NFTs or credentials without portable standards are just receipts inside someone else’s box. That’s not web3—it’s Web2.5 with a token badge.

The risk isn’t just another walled garden. It’s a walled garden with eye tracking, biometrics, and kids. That’s a different level of responsibility.

What you’ll get from this guide

I pulled out the signal from Mantegna’s talk and mapped it to what crypto and creators can actually do. You’ll get:

- A quick breakdown of her core ideas and why they matter to builders, traders, and parents

- The key risks—surveillance, lock-in, scams, safety gaps—and how they show up in real products

- What good looks like: interoperability, rights, open standards, safety-by-design

- A practical checklist for users, builders, and investors

- A clear FAQ with the questions people actually ask (not just what platforms want to answer)

Who this guide is for

- Web3 founders and product teams shipping wallets, worlds, or marketplaces

- NFT traders and collectors who care about portability and real utility

- Creators and modders making avatars, worlds, and virtual goods

- Community leads and moderators who manage safety on the ground

- Policy folks setting rules for privacy, kids’ safety, and competition

- Parents and curious users who want to protect their time, money, and data

Quick look at the talk

You can watch the talk and read the transcript here: TED: How to stop the metaverse from becoming the internet’s bad sequel. It’s a punchy warning with a hopeful message: we still have time to steer this ship before it hardens into a bad sequel.

Curious what Mantegna actually argues—and how it translates into concrete choices for crypto, creators, and platforms? Let’s unpack the core thesis next. Ready for the no-BS breakdown?

What Micaela Mantegna actually argues in her TED talk

The core thesis

I heard a simple, urgent message: don’t let a small club of companies own our virtual lives. If the metaverse copies the worst parts of Web2—surveillance, lock-in, and “you’re the product” tricks—we’ll hard-code a more invasive internet for the next decade.

“Build worlds, not walled gardens.”

This isn’t abstract. We’ve already seen how platform taxes and closed ecosystems shape behavior. Apple’s 30% cut rules the mobile app economy and now extends its grip to NFTs in apps. Meta floated a 47.5% take on Horizon Worlds’ creator sales. Steam shut the door on blockchain games entirely. The pattern is clear: when platforms control identity, inventory, and payments, your “ownership” is conditional.

Risks she flags

- Walled gardens and lock-in

Buy a skin in one world; it’s stuck there. Create a community; it vanishes if a platform pivots. We’ve lived this with app stores, game launchers, and social networks. The metaverse raises the stakes—assets, identities, even your social graph become platform collateral.

- Data extraction 2.0: biometrics and emotion

XR tracks how you move, where you look, and how you feel. Privacy researchers warn this data is uniquely identifying and intensely sensitive. Mozilla’s latest Privacy Not Included review calls out VR headsets for aggressive data collection and weak controls. The Future of Privacy Forum details how gaze, hand, and body motion can reveal identity and health traits. In short: this isn’t “just analytics.” It’s a behavioral fingerprint.

- Harassment and safety gaps

VR intensifies presence: good for immersion, worse for abuse. The ADL reports that the majority of online gamers experience harassment, and early metaverse case studies show the same patterns in 3D space. “Mute, block, report” after the harm isn’t enough when harassment feels face-to-face.

- Algorithmic bias and opaque moderation

Biased AI doesn’t vanish in XR; it compounds. The landmark Gender Shades study exposed massive accuracy gaps in commercial gender-classification systems, with the highest error rates for darker-skinned women. Now add real-time body tracking and voice analysis: mistakes get more personal, and the black box stays closed.

- Addictive design and dark patterns

Look for loot box logic and compulsion loops dressed up in 3D. Research consistently links loot box spend with problem gambling severity (Zendle & Cairns, 2018), and the UK House of Lords urged regulating them as gambling. XR can make these mechanics feel even stickier.

- Weak creator rights and broken incentives

When distribution is captive, creators get squeezed. We’ve seen investigations into how young devs were underpaid or misled on platforms like Roblox. Move that dynamic into the metaverse and creators risk building castles on rented land.

Guardrails she wants

- Interoperability and standards by default

Avatars, assets, and identities that move between worlds without begging a gatekeeper. Think open file formats, portable inventories, and platform-agnostic login. OpenXR for runtime compatibility, standardized 3D formats, and clear “assets in, assets out” commitments are the baseline—not a “phase two.”

- Strong digital rights

Privacy, portability, and the right to be pseudonymous. GDPR already recognizes data portability; biometric laws like Illinois’ BIPA add teeth for face and voice. XR needs explicit limits on gaze/pose/emotion data and real consent that isn’t buried in a modal.

- Safety-by-design and transparency

Proactive safety tools (personal boundaries, voice masking, proximity controls) turned on by default. Clear incident reporting and response times published openly. The UK’s Age Appropriate Design Code is a strong blueprint for child-first defaults.

- Competition and antitrust awareness

Don’t bake the app-store trap into headsets and worlds. The EU’s Digital Markets Act pushes interoperability and curbs self-preferencing—pressure that XR absolutely needs.

- Accessibility and inclusion

Subtitles, high-contrast modes, motion sensitivity toggles, and keyboard parity aren’t “nice-to-haves.” W3C’s work on accessible XR gives practical guidance that should be required reading.

Why her angle stands out

Most big-deck “metaverse” talks hand-wave the messy parts. Her lens comes from games: modders, community managers, indie devs, creators getting paid (or not). That’s where real culture is set. Look at how a Steam policy change can make whole genres disappear overnight, or how Epic’s UEFN profit-sharing can suddenly put fairer economics on the table. These are not hypotheticals—they’re the rules of engagement the metaverse will inherit unless we change them.

And here’s the emotional truth that stuck with me: when presence is this strong, the harm and the good both get amplified. That’s exactly why the default settings matter. We shouldn’t have to choose between wonder and safety.

So what does all this mean for wallets, NFTs, and on-chain identity—practically, not theoretically? Keep going; the next part breaks it down with receipts.

Why this matters to crypto and web3

Ownership that actually travels

“You own it” means nothing if your avatar, skin, or credential gets stuck inside one platform’s walled garden. I’ve seen too many “metaverse” pitches that mint NFTs, then quietly trap them in a single world with custom file formats and closed inventories.

Real ownership is portable. That looks like:

- Assets that load anywhere: 3D wearables as glTF/USD with standardized materials, not bespoke importer hell.

- Wallets as passports, not prisons: support for CAIP-10 identifiers and WalletConnect so accounts work across chains and apps.

- Identity you control: W3C DIDs and Verifiable Credentials to carry reputation and badges between games without doxxing yourself.

- Token-bound gear: ERC-6551 (token-bound accounts) so your avatar NFT can “own” its wearables and achievements on-chain.
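To make “wallets as passports” concrete: a CAIP-10 account ID pins an address to its chain (for example `eip155:1:<address>` for an Ethereum mainnet account), so any app can tell which network an account lives on. The sketch below parses one using the character rules from the CAIP-2 and CAIP-10 specs as I understand them; `AccountId` and `parse_caip10` are illustrative names, not part of WalletConnect or any wallet SDK.

```python
import re
from dataclasses import dataclass

# CAIP-2 chain ID (namespace:reference) followed by a CAIP-10 account address.
_CAIP10 = re.compile(
    r"(?P<namespace>[-a-z0-9]{3,8})"
    r":(?P<reference>[-_a-zA-Z0-9]{1,32})"
    r":(?P<address>[-.%a-zA-Z0-9]{1,128})"
)


@dataclass(frozen=True)
class AccountId:
    namespace: str  # chain family, e.g. "eip155" for EVM chains
    reference: str  # chain within the family, e.g. "1" for Ethereum mainnet
    address: str    # account address on that chain

    @property
    def chain_id(self) -> str:
        return f"{self.namespace}:{self.reference}"

    def __str__(self) -> str:
        return f"{self.chain_id}:{self.address}"


def parse_caip10(value: str) -> AccountId:
    """Parse a CAIP-10 account ID, rejecting anything outside the grammar."""
    match = _CAIP10.fullmatch(value)
    if match is None:
        raise ValueError(f"not a CAIP-10 account ID: {value!r}")
    return AccountId(**match.groupdict())


account = parse_caip10("eip155:1:0xab16a96D359eC26a11e2C2b3d8f8B8942d5Bfcdb")
print(account.chain_id)  # eip155:1
```

Because the chain is part of the identifier, a world can accept accounts from several chains without guessing which network an address belongs to.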

We already see glimpses: Ready Player Me avatars work across thousands of apps; Lens and Farcaster show portable social graphs across clients. But too many “metaverse” integrations still behave like the old model: import in, never out. If a platform won’t let you bring and leave with your stuff, it’s not ownership—it’s rental with extra steps.

“Portability is the difference between a marketplace and a maze.”

Privacy as a feature, not a footnote

XR data is not just clicks—it’s your body. Head/hand motion, gaze, gait, micro-expressions, even room scans. That data can fingerprint you and infer mood or health if mishandled. Researchers have shown that short windows of motion/gaze data can uniquely identify most users with high accuracy. If that doesn’t make you flinch, it should.

What to build into the stack:

- Prove without showing: zero-knowledge proofs (zk) for age checks, access rights, and score thresholds. Show you qualify, reveal nothing else.

- Selective disclosure by default: BBS+ or SD-JWT style credentials so users can share the one attribute needed—nothing more.

- Data minimization: store less, for less time, with clear, revocable consent. “Telemetry off unless you opt in,” not the other way around.

- Pseudonymity as a norm: let people separate identities across worlds without losing reputation. Tie creds to keys, not legal names.
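Here is roughly how SD-JWT-style selective disclosure works: the issuer’s payload holds only salted hashes of each claim, and the holder later reveals just the claims a verifier needs. This is a simplified sketch following the disclosure and digest rules of the SD-JWT draft as I understand them; it skips the issuer’s signature, key binding, and the JWT envelope, so it is not a substitute for a maintained SD-JWT library. A zero-knowledge proof would go further and prove a predicate such as “over 18” without revealing even that one claim.

```python
import base64
import hashlib
import json
import secrets


def b64url(data: bytes) -> str:
    # Unpadded base64url, as used throughout JOSE and SD-JWT.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_disclosure(name: str, value) -> str:
    # A disclosure is base64url(JSON array [salt, claim name, claim value]).
    salt = b64url(secrets.token_bytes(16))
    return b64url(json.dumps([salt, name, value]).encode("utf-8"))


def digest(disclosure: str) -> str:
    # The digest is SHA-256 over the ASCII bytes of the encoded disclosure.
    return b64url(hashlib.sha256(disclosure.encode("ascii")).digest())


def issue(claims: dict) -> tuple[dict, dict[str, str]]:
    """Issuer: the (normally signed) payload carries only salted digests."""
    disclosures = {name: make_disclosure(name, value) for name, value in claims.items()}
    payload = {"_sd": sorted(digest(d) for d in disclosures.values()), "_sd_alg": "sha-256"}
    return payload, disclosures


def verify(payload: dict, presented: list[str]) -> dict:
    """Verifier: accept only disclosures the issuer committed to."""
    revealed = {}
    for disclosure in presented:
        if digest(disclosure) not in payload["_sd"]:
            raise ValueError("disclosure was not committed to by the issuer")
        padded = disclosure + "=" * (-len(disclosure) % 4)
        _salt, name, value = json.loads(base64.urlsafe_b64decode(padded))
        revealed[name] = value
    return revealed


payload, disclosures = issue({"age_over_18": True, "birthdate": "2001-04-09"})
# The holder shows only the age claim; the birthdate stays hidden.
print(verify(payload, [disclosures["age_over_18"]]))  # {'age_over_18': True}
```

The random salt is what keeps a verifier from guessing hidden values by hashing likely candidates.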

If you want a gut-check on the current state, Mozilla’s Privacy Not Included has repeatedly flagged XR devices for aggressive data collection. Let’s not rebuild Web2’s surveillance machine with cameras strapped to our faces.

Governance that scales

Moderation, rules, and creator payouts can’t be a black box. We need shared power models that don’t grind to a halt.

- Hybrid governance: on-chain proposals and treasuries with off-chain nuance. Think Snapshot signaling + Safe/Reality.eth for execution.

- Bicameral or multi-stake views: look at Optimism’s Token House and Citizens’ House as a template to balance capital with contribution.

- Independent dispute resolution: systems like Kleros can arbitrate creator disputes or marketplace takedowns with transparent evidence and appeals.
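The “balance capital with contribution” idea can be expressed as a simple rule: a proposal must clear quorum and a majority in a token-weighted house and in a one-member-one-vote house. The sketch below is hypothetical; the quorum and threshold values are placeholders, and Optimism’s actual houses divide responsibilities rather than co-signing every proposal, so treat this as a pattern, not a model of their process.

```python
from dataclasses import dataclass


@dataclass
class HouseVote:
    yes: float       # voting power cast in favor
    no: float        # voting power cast against
    eligible: float  # total voting power in this house

    def passes(self, quorum: float, threshold: float) -> bool:
        turnout = self.yes + self.no
        if self.eligible <= 0 or turnout == 0:
            return False
        # Not enough participation: the house hasn't really spoken.
        if turnout / self.eligible < quorum:
            return False
        # Require strictly more than `threshold` of the votes cast.
        return self.yes / turnout > threshold


def bicameral_passes(capital: HouseVote, contributors: HouseVote,
                     quorum: float = 0.3, threshold: float = 0.5) -> bool:
    """A proposal passes only if BOTH houses approve it."""
    return (capital.passes(quorum, threshold)
            and contributors.passes(quorum, threshold))


# Token holders love it, contributors mostly reject it, so it fails.
whale_push = bicameral_passes(
    capital=HouseVote(yes=900, no=100, eligible=1_000),   # token-weighted
    contributors=HouseVote(yes=10, no=40, eligible=100),  # one vote per member
)
print(whale_push)  # False
```

The design choice is that neither capital nor contribution can push a change through alone; the quorum check stops a handful of voters from speaking for a whole house.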

Games and worlds evolve fast. Put policy in code where possible, but keep human context in the loop for edge cases. The metaverse will have them daily.

Creator rights that work

Creators shouldn’t have to pray a marketplace “honors royalties.” We’ve seen how optional standards get ignored when incentives shift.

- Clear licensing: adopt plain-English, enforceable licenses like the “Can’t Be Evil” NFT licenses to define rights before conflicts start.

- Provenance by default: use ERC-721/1155 with on-chain metadata commitments so authorship can’t be faked.

- Royalties that travel: implement ERC-2981 plus a Royalty Registry so marketplaces can’t plead “technical limitations.”

- Mod-friendly policies: let creators extend each other’s work with revenue splits encoded at mint. Collaboration shouldn’t require lawyers and luck.

When royalties became a toggle, creator income cratered across some markets. If we want vibrant economies in virtual worlds, value flow has to be predictable and portable—not marketing fluff that vanishes in a bear market.
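To see what “royalties that travel” and “revenue splits encoded at mint” mean mechanically, here is a small Python model of ERC-2981’s royalty calculation plus a mint-time split among collaborators. It’s an off-chain illustration, not Solidity: prices are integers in the smallest currency unit, the basis-point denominator mirrors OpenZeppelin’s default ERC2981 implementation, and the split function and addresses are hypothetical. It also makes the limit visible: ERC-2981 tells a marketplace what is owed but cannot force it to pay.

```python
from dataclasses import dataclass

FEE_DENOMINATOR = 10_000  # basis points: 500 bps = 5%


@dataclass(frozen=True)
class Royalty:
    receiver: str
    fee_bps: int

    def royalty_info(self, sale_price: int) -> tuple[str, int]:
        # Same shape as ERC-2981's royaltyInfo(tokenId, salePrice): it only
        # REPORTS who should be paid and how much; paying is up to the marketplace.
        return self.receiver, sale_price * self.fee_bps // FEE_DENOMINATOR


def split_royalty(amount: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Divide a royalty among collaborators using shares fixed at mint (hypothetical)."""
    if sum(shares_bps.values()) != FEE_DENOMINATOR:
        raise ValueError("shares must add up to 10,000 bps")
    payouts = {who: amount * bps // FEE_DENOMINATOR for who, bps in shares_bps.items()}
    # Integer division can leave a few units of dust; give them to the first creator.
    first = next(iter(shares_bps))
    payouts[first] += amount - sum(payouts.values())
    return payouts


# Hypothetical addresses: an original creator and a modder who extended the work.
royalty = Royalty(receiver="0xCreator", fee_bps=500)
receiver, amount = royalty.royalty_info(sale_price=2_000_000)
print(receiver, amount)  # 0xCreator 100000
print(split_royalty(amount, {"0xCreator": 7_000, "0xModder": 3_000}))
# {'0xCreator': 70000, '0xModder': 30000}
```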

Scam control and safety

Rug pulls, fake airdrops, malicious files, wallet drainers—the usual crypto villains are already wearing avatars. Safety isn’t pop-ups and fine print; it’s smart defaults and clear paths to recover.

- Wallet hygiene: use per-world permissions, session keys (enabled by ERC-4337 smart accounts), and limited allowances (Permit2) instead of “unlimited forever.”

- Fast revocation: give users one-click kill switches and link to tools like Revoke.cash and Etherscan’s Token Approval Checker.

- Trusted identity cues: verified ENS names, VCs for creators, and consistent SIWE (EIP-4361) prompts to fight phishing.

- In-world reporting that works: visible reporting, quick triage, and transparent outcomes. No one should guess if a bad actor was actually removed.
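Consistent SIWE prompts help because EIP-4361 fixes what the user sees: the requesting domain comes first, followed by the address, a statement, and labeled fields. The sketch below builds that message for a hypothetical world at `world.example` and shows the check a wallet can run before asking for a signature. It covers message construction only; signing, signature verification, and nonce tracking are left to a maintained SIWE library, and the optional `scheme://` prefix and fields such as Not Before and Resources are omitted.

```python
from dataclasses import dataclass
from typing import Optional

HEADER_SUFFIX = " wants you to sign in with your Ethereum account:"


@dataclass
class SiweRequest:
    domain: str     # the site requesting sign-in, e.g. "world.example"
    address: str    # the user's Ethereum address
    statement: str  # human-readable purpose shown to the user
    uri: str        # resource the session is for
    chain_id: int
    nonce: str      # server-issued, single-use, at least 8 alphanumeric chars
    issued_at: str  # RFC 3339 timestamp
    expiration_time: Optional[str] = None

    def to_message(self) -> str:
        # Field order and labels follow the EIP-4361 message layout.
        lines = [
            self.domain + HEADER_SUFFIX,
            self.address,
            "",
            self.statement,
            "",
            f"URI: {self.uri}",
            "Version: 1",
            f"Chain ID: {self.chain_id}",
            f"Nonce: {self.nonce}",
            f"Issued At: {self.issued_at}",
        ]
        if self.expiration_time:
            lines.append(f"Expiration Time: {self.expiration_time}")
        return "\n".join(lines)


def domain_matches(message: str, expected_domain: str) -> bool:
    """Wallet-side phishing check: the message's domain must be the site you're on."""
    header = message.split("\n", 1)[0]
    if not header.endswith(HEADER_SUFFIX):
        return False
    return header[: -len(HEADER_SUFFIX)] == expected_domain


request = SiweRequest(
    domain="world.example",
    address="0xab16a96D359eC26a11e2C2b3d8f8B8942d5Bfcdb",
    statement="Sign in to World Example.",
    uri="https://world.example/login",
    chain_id=1,
    nonce="k3Jd92LmQ",
    issued_at="2024-05-01T12:00:00Z",
)
message = request.to_message()
print(domain_matches(message, "world.example"))  # True
print(domain_matches(message, "wor1d.example"))  # False
```

A lookalike domain fails the check even though the rest of the prompt looks identical, which is exactly the phishing case consistent prompts are meant to catch.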

And yes—deepfake avatars and voice skins are here. Treat verified creator credentials like seatbelts: most days you won’t need them; the one day you do, you’ll be grateful.

The mindset shift

Let’s be honest: “number go up” built clicks, not trust. In spatial, social worlds, trust is the product. The playbook that wins here:

- Utility over speculation: assets should unlock access, identity, or creativity—not just resale drama.

- Portability as a KPI: brag about import/export and cross-world usage, not vanity metrics.

- Privacy as a sales feature: make “we don’t store your body data” a headline, not a buried policy line.

If we get this right, crypto becomes the connective tissue of fair virtual economies. If we don’t, it becomes a shiny sticker on walled gardens 2.0.

Want the exact steps I use to test whether a platform walks the talk—privacy defaults, interop, creator payouts, and safety UX? Keep going. The next section breaks it into a practical checklist you can run this week.

The metaverse we should build: a practical checklist

“The future is already here — it’s just not evenly distributed.” — William Gibson

I want a metaverse my kids can explore, creators can thrive in, and we can trust with our wallets and our time. Here’s the no-BS checklist I use when I review platforms, tools, and tokens. Steal it, remix it, ship it.

For users

- Use pseudonyms and split identities. Treat each world like a different neighborhood. One name for work communities, another for gaming, another for collecting. If something leaks, it won’t trace everything back to you.

- Lock down headset and app permissions. Turn off “always-on” analytics and precise tracking unless you need it. Eye, hand, and head motion can fingerprint you across sessions; academic labs such as Stanford’s VHIL have shown how uniquely identifiable motion data can be in XR.

- Use a hardware wallet as your vault, and per-world burner wallets for new platforms. Keep the vault cold; use burners for experiments, claims, and unknown contracts. Rotate burners regularly.

- Revoke shady permissions. Check and clean token approvals before they bite you. I run periodic checks with tools like revoke.cash.

- Learn fast threat-model basics.

- Phishing: no seed phrases or passkeys in chats, ever.

- Fake airdrops: if “setApprovalForAll” pops up unexpectedly, stop.

- Malicious files/mods: only download from verified creator pages; scan archives before opening.

- Social engineering in VR: if a stranger urges you to rush-confirm a transaction, take the headset off and verify on a separate device.

- Set session boundaries. Use comfort settings (snap turn, vignette), time limits, and quick-exit shortcuts. You’ll enjoy the experience more—and stick around longer—when you’re not fried.
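The “setApprovalForAll” red flag can be checked mechanically before you sign. The sketch below decodes raw transaction calldata and flags two classic drainer patterns: a blanket NFT approval and an unlimited ERC-20 allowance. It assumes standard ABI encoding, hard-codes the two function selectors, and ignores everything else (Permit2 signatures, `increaseAllowance`, proxy calls), so treat it as an illustration of what a wallet’s transaction preview should surface, not as protection.

```python
from typing import Optional

# Function selectors: the first 4 bytes of keccak-256 of each signature.
# Hard-coded because Python's standard library has no keccak-256.
APPROVE = "095ea7b3"               # ERC-20 approve(address,uint256)
SET_APPROVAL_FOR_ALL = "a22cb465"  # ERC-721/1155 setApprovalForAll(address,bool)
MAX_UINT256 = 2**256 - 1


def _word(data: str, index: int) -> str:
    # ABI arguments are 32-byte words (64 hex chars) after the 4-byte selector.
    start = 8 + 64 * index
    return data[start:start + 64]


def approval_warning(calldata: str) -> Optional[str]:
    """Return a plain-language warning for risky approval calls, else None."""
    data = calldata.lower().removeprefix("0x")
    if len(data) < 8 + 64 * 2:
        return None  # too short to be either call we check for
    selector = data[:8]
    grantee = "0x" + _word(data, 0)[24:]  # an address is the low 20 bytes of its word
    value = int(_word(data, 1), 16)
    if selector == SET_APPROVAL_FOR_ALL and value != 0:
        return f"{grantee} could move EVERY item you hold in this collection"
    if selector == APPROVE and value == MAX_UINT256:
        return f"{grantee} gets an UNLIMITED allowance of this token"
    return None


operator = "1234567890abcdef1234567890abcdef12345678"
calldata = "0x" + SET_APPROVAL_FOR_ALL + "0" * 24 + operator + f"{1:064x}"
print(approval_warning(calldata))
```

A revocation (`setApprovalForAll(operator, false)`) or a bounded allowance returns `None`, so the warning only fires on the grants worth pausing over.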

For builders

- Privacy by default. Collect less. Turn telemetry off unless users opt in. Summarize what you collect in one screen with plain language and a toggle per data type (voice, gaze, motion, location).

- Ship open, portable assets and identities. Support standard formats:

- 3D: glTF 2.0 for assets, USD for complex scenes and pipelines

- XR: OpenXR for runtime compatibility

- Crypto: ERC‑721/1155 for assets, 4337 for smart accounts, 6551 for token-bound accounts

Real-world proof: avatar systems like Ready Player Me lean on glTF to move across thousands of apps. That’s the spirit—make it normal.

- Age-appropriate design. No dark patterns. If kids might use it, follow the UK’s Children’s Code. No loot-box mechanics without clear odds, no nag loops, no “confirmshaming.” The EU’s DSA is already pressing on manipulative design.

- Interop testing is a release requirement. Before you ship:

- Import/export test: round-trip glTF/USD assets between Unity/Unreal and a reference viewer

- Wallet test: mint/transfer with at least two wallet providers and confirm metadata renders in a neutral explorer

- Avatar test: spawn an external avatar across two different runtimes (OpenXR-compliant) without manual fixes

- Publish transparency reports. Quarterly, not yearly. Include moderation actions, median response time to abuse reports, takedown appeal outcomes, and creator payout stats.

- Accessibility from day zero. Follow WCAG 2.2 guidance and XR-specific comfort options: captions, color contrast, locomotion alternatives, motion toggle, UI scale, and readable fonts.

- Safety-by-design beats reactive moderation. Preempt harm with system-level block/mute, safe personal spaces, rate limits on DMs, and default proximity voice limits. The Australian eSafety Commissioner’s Safety by Design framework has practical patterns.
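“Privacy by default” and per-type toggles translate into a small amount of code. This is a minimal sketch, assuming telemetry arrives as typed events and consent is stored per data type; the 30-day retention window and the class names are hypothetical. Anything the user hasn’t opted into, or that has aged past retention, never reaches storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

SENSITIVE_TYPES = ("voice", "gaze", "motion", "location")


@dataclass
class TelemetryEvent:
    data_type: str
    recorded_at: datetime
    payload: dict


@dataclass
class ConsentSettings:
    # Privacy by default: every sensitive stream starts switched OFF.
    granted: dict[str, bool] = field(
        default_factory=lambda: {t: False for t in SENSITIVE_TYPES})
    retention: timedelta = timedelta(days=30)  # placeholder retention window

    def set_consent(self, data_type: str, allowed: bool) -> None:
        # One call to opt in, the same call to opt out: withdrawing is as easy as granting.
        if data_type not in self.granted:
            raise ValueError(f"unknown data type: {data_type}")
        self.granted[data_type] = allowed


def minimize(events: list[TelemetryEvent], settings: ConsentSettings,
             now: datetime) -> list[TelemetryEvent]:
    """Keep only events the user opted into and that fall inside the retention window."""
    cutoff = now - settings.retention
    return [
        e for e in events
        if settings.granted.get(e.data_type, False) and e.recorded_at >= cutoff
    ]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
events = [
    TelemetryEvent("gaze", now, {"x": 0.4, "y": 0.1}),
    TelemetryEvent("voice", now, {"seconds": 12}),
    TelemetryEvent("voice", now - timedelta(days=45), {"seconds": 30}),
]
settings = ConsentSettings()
settings.set_consent("voice", True)
print(len(minimize(events, settings, now)))  # 1: only the fresh voice event
```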

For platforms and policymakers

- Make interoperability and portability the rule, not the exception. Data and asset portability should be as normal as copy/paste. Privacy laws already support this—see GDPR’s Article 20 on data portability.

- Guard against lock-in. No exclusivity contracts that block creators from selling or moving their own work across worlds. If your platform wins, it should be on merit, not trapdoors.

- Due process for moderation. Users deserve a clear code of conduct, reasons for enforcement, an appeal path, and auditability. Publish policy change logs and enforcement case studies (with privacy safeguards).

- Accessibility isn’t a bolt-on. Performance must hold on mid-tier devices, captions must be accurate, and UI must be operable without perfect vision, hearing, or motor control. Give users motion sensitivity controls and “comfort-first” defaults.

- Biometric data needs special protection. Gaze, posture, and emotion proxies should be opt-in, locally processed when possible, and never sold. Default to aggregation and differential privacy for analytics.
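For the “aggregation and differential privacy” default, the textbook starting point is the Laplace mechanism: add noise with scale sensitivity/epsilon before publishing a count. The sketch below assumes each user contributes at most once to the count (sensitivity 1). It uses Python’s `random` module for readability; a production system should use a vetted DP library, since naive floating-point sampling can leak information and every extra release spends more of the privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent Exponential(rate=1/scale) draws
    # is Laplace-distributed with mean 0 and the given scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random,
             sensitivity: float = 1.0) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    sensitivity=1 assumes each user changes the count by at most 1.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
# e.g. "how many users turned on eye tracking today", published with noise
print(round(dp_count(1_280, epsilon=0.5, rng=rng)))
```

The published number is close enough for dashboards, but no single user’s choice can be read off it.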

Metrics that actually matter

If a platform only brags about DAUs and session length, I assume misaligned incentives. These are the north-star numbers that tell me we’re building the right thing:

- Time well spent, not just time spent. Short in-headset surveys (1–2 taps) after sessions: “Was this session valuable?” Track weekly “net positive sessions.” If this drops while usage rises, you’re probably leaning on compulsion, not quality.

- Safety outcomes that move. Median time-to-action on abuse reports; repeat-offender rate over 30/90 days; percentage of users who feel safer after using built-in tools. Publish the numbers in your transparency report.

- Creator earnings and predictability. Share of revenue paid to creators, payout latency, and churn among top and mid-tier creators. If the middle class is shrinking, your economy is brittle.

- Portability rate. The percentage of items and identities that can exit and re-enter your platform using open standards. Real portability shows up in logs, not pitch decks.

- Consent quality. Opt-in rates for telemetry when explained in plain language; percentage of users changing defaults after onboarding (a sign they understood their choices).
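The metrics above are cheap to compute once the underlying events are logged. A minimal sketch, assuming hypothetical record shapes: each abuse report carries the reported account, the day it was filed, and hours until action; portability is logged as pass/fail per item round trip; sessions end with a yes/no “Was this session valuable?” tap.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass
class AbuseReport:
    offender: str           # pseudonymous ID of the reported account
    day: int                # day the report was filed
    hours_to_action: float  # time from report to moderator action


def median_time_to_action(reports: list[AbuseReport]) -> float:
    return median(r.hours_to_action for r in reports)


def repeat_offender_rate(reports: list[AbuseReport], window_days: int, today: int) -> float:
    """Share of reported accounts that were reported more than once in the window."""
    recent = [r for r in reports if 0 <= today - r.day < window_days]
    counts = Counter(r.offender for r in recent)
    if not counts:
        return 0.0
    return sum(1 for n in counts.values() if n > 1) / len(counts)


def portability_rate(round_trips: dict[str, bool]) -> float:
    """Share of items that survived export and re-import via open formats."""
    return sum(round_trips.values()) / len(round_trips) if round_trips else 0.0


def net_positive_share(votes: list[bool]) -> float:
    """Share of post-session answers that said the session was valuable."""
    return sum(votes) / len(votes) if votes else 0.0


reports = [
    AbuseReport("a", day=1, hours_to_action=2.0),
    AbuseReport("a", day=20, hours_to_action=6.0),
    AbuseReport("b", day=25, hours_to_action=1.0),
    AbuseReport("c", day=80, hours_to_action=10.0),
]
print(median_time_to_action(reports))  # 4.0
print(portability_rate({"hat": True, "sword": False, "avatar": True, "cape": True}))  # 0.75
```

Running the repeat-offender rate over both a 30-day and a 90-day window, as the list suggests, shows whether enforcement actually sticks or just delays the next report.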

The last thing any of us want is a high-res rerun of the old internet with worse data trails. I keep asking teams a simple question: if you couldn’t lock users in, would your product still win? The honest answers tell you everything.

Want to see the moments that lit this fire for me—and the lines I replay when someone says “walled gardens are fine”? You’ll want what’s next.

Key moments from Mantegna’s talk worth replaying

The opening framing

I rewatched the first minute twice. It’s a gut-check: if we don’t set guardrails now, we’ll hard‑code the worst parts of the internet into something more intimate—headsets on our faces, sensors on our hands, cameras in our rooms. That’s not fear-mongering; it’s product reality.

“If we don’t set the rules now, the default settings will set them for us.”

What does “more intimate” actually mean?

- Biometrics as product data: Eye movements, hand tremors, posture, room scans. These aren’t clicks on a screen—this is your body acting as the interface. Reports like Mozilla’s Privacy Not Included have repeatedly flagged VR headsets for broad data collection and vague retention policies.

- Inference at scale: Eye-tracking companies such as Tobii explain how gaze patterns reveal attention and emotion states. That’s great for accessibility and performance (foveated rendering) but also a goldmine for ad-tech if misused.

Her point lands because the stakes are tangible: XR doesn’t just know what you click—it can guess what you feel.

The rights wishlist

When she lays out “privacy, interoperability, user control,” it doesn’t come off as abstract policy talk. It’s a product brief. You can feel the years of game community lessons baked in.

- Privacy that’s real-world ready: Apple’s Optic ID is stored on-device and not shared with apps. That’s the kind of default we should expect across the stack: on-device first, minimal data, clear toggles.

- Interoperability that actually ships: Support for open asset formats like glTF and USD, and runtime standards like OpenXR. If your avatar, items, and credentials can’t move, “ownership” is cosmetic.

- User control without buried settings: No more 12-click privacy journeys. Clear, up-front choices with human language—especially for families using shared devices.

It’s easy to think privacy and UX are in tension. She flips that: the best UX is the one that won’t betray you later.

The walled‑garden warning

This is the part builders and investors hate to hear and need to hear. If a handful of platforms own identity and inventory, creators lose leverage, and users rent everything forever.

- Creators at the mercy of a policy switch: Remember when Minecraft banned NFTs? Overnight, entire community projects evaporated. Whether you agreed with the policy or not, the takeaway was brutal: if your economy lives at someone else’s discretion, it can vanish with a blog post.

- In-app tax cages: Apple’s rules on NFTs and “digital goods” still funnel transactions through in-app payments with a steep cut, making portable markets expensive or impractical. See coverage like The Verge for the contours. Monetization design can be a lock-in strategy.

- UGC that can’t leave: Most major platforms keep creator assets and avatars captive by default. Good for retention, bad for an open metaverse.

Cory Doctorow calls this the platform “enshittification” cycle: “First, they’re good to their users; then they abuse users to make things better for their business customers; finally, they abuse those business customers to claw back all the value for themselves.”

That quote loops in my head every time I hear “open ecosystem” in a deck. If it’s open, show me the export button.

The call to action

The closer is refreshing because it doesn’t outsource responsibility. No “someone should do something.” It’s all of us.

- Users: Ask one question before you commit time or money—can I take my stuff and my identity elsewhere?

- Builders: Ship with open formats on day one, and make data minimization the default, not a settings menu hobby.

- Policymakers: Favor interoperability and portability mandates over performative fines that arrive three years too late.

- Investors: Reward teams that prove portability, safety outcomes, and transparency—not just glossy MAUs.

I left the talk with a simple filter for any “metaverse” pitch: if it can’t explain portability, privacy defaults, and creator leverage in one page, it’s not ready.

Still wondering how this connects to crypto, whether we need new laws, or if NFTs are even necessary here? Keep going—I’ve pulled together the questions people actually ask and straight answers next.

FAQ: Everything you wanted to ask (and what people usually search for)

What’s Micaela Mantegna’s main point?

We still have a window to stop the metaverse from turning into the internet’s bad sequel. That means hard-coding openness, privacy, and safety now—before a few giants lock down identity, assets, and economic rails. If you’ve watched gaming evolve, you’ve seen the pattern: amazing creativity, then slow enclosure.

Translation: don’t let “you’re the product” business models become “your eyes, voice, and body are the product.”

How does this connect to crypto?

Crypto is the toolset for portable identity, assets, and payments—if we use it right. A skin you buy in one world should be yours across many, the way your email works across providers. That only happens with open standards and privacy by default.

Example: a wallet that supports ERC‑721/1155 for assets, 4337 for smart wallet UX, and 6551 for avatar-bound accounts could move your inventory and permissions between worlds. Without that, we get “Web2.5” tokens stuck behind platform walls—think Roblox items that never leave Roblox, or in-app NFT experiences subject to app store rules.

What are the biggest risks?

- Surveillance-by-default: XR devices capture gaze, body motion, voice, and surroundings. Privacy researchers and groups like Mozilla flag how this data can profile you with scary accuracy. See Mozilla’s Privacy Not Included: VR Headsets.

- Lock-in: Closed identity systems and proprietary formats turn “ownership” into a lease you can’t move. If you can’t export your avatar or assets, you don’t have leverage.

- Harassment and safety gaps: Social VR repeats gaming’s worst behavior unless safety tools are front-and-center. The ADL’s 2023 report shows abuse is still widespread in online play.

- Scams and rug pulls: Crypto scams aren’t theoretical. Chainalysis tracks billions lost each year; check their Crypto Crime reports for the latest patterns.

- Dark patterns and loot-box economics: Manipulative UX works on kids and adults alike. The UK’s review of loot boxes called for stronger consumer protections.

- Opaque moderation and bias: When enforcement is a black box, users don’t trust outcomes. The Santa Clara Principles outline basic transparency and due process.

What does “interoperability” actually mean here?

It means your stuff, identity, and relationships aren’t trapped.

- Assets: Export/import 3D items in open formats like glTF or USD.

- XR runtimes: Build against OpenXR so apps can run across headsets.

- Identity: Use interoperable IDs (e.g., W3C DIDs) that aren’t owned by any single platform.

- Social graphs: Allow export/import and consent-based portability—no hostage-taking.

In practice: sign in with your wallet or DID, load your avatar in glTF/USD, and have permissions follow you across worlds without re-KYC’ing everywhere.
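Signing in with a DID starts with knowing what a DID looks like: `did:`, a lowercase method name, and a method-specific identifier. The sketch below checks that core grammar from W3C DID Core. It deliberately ignores DID URLs (paths, queries, fragments) and does not resolve the DID to its document, which is where keys and service endpoints live. The example uses `did:pkh`, a DID method that wraps a CAIP-10 blockchain account, to show how wallet sign-in and DIDs connect.

```python
import re

# idchar = ALPHA / DIGIT / "." / "-" / "_" / pct-encoded
_IDCHAR = r"(?:[A-Za-z0-9._-]|%[0-9A-Fa-f]{2})"
_DID = re.compile(rf"did:(?P<method>[a-z0-9]+):(?P<msid>(?:{_IDCHAR}*:)*{_IDCHAR}+)")


def parse_did(value: str) -> tuple[str, str]:
    """Split a DID into (method, method-specific ID) per the core DID syntax."""
    match = _DID.fullmatch(value)
    if match is None:
        raise ValueError(f"not a valid DID: {value!r}")
    return match["method"], match["msid"]


print(parse_did("did:pkh:eip155:1:0xab16a96D359eC26a11e2C2b3d8f8B8942d5Bfcdb"))
print(parse_did("did:web:example.com"))  # ('web', 'example.com')
```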

Is the metaverse dead?

No. The hype fell, the building didn’t. Headsets keep iterating, engines like Unity/Unreal and open stacks are standardizing, and creators ship cross-world content daily. This is exactly when defaults get set—either open or closed.

Do we need new laws?

Some, plus clearer guidance for XR specifics. Biometric data needs strict limits, and some jurisdictions already recognize this: the EU’s GDPR Article 9 treats biometric data used to identify people as a special category. Illinois’ BIPA is a template for explicit consent and penalties. We also need portability rights with teeth, guardrails against anti-competitive lock-in, algorithmic transparency, and strong protections for kids.

Bottom line: we can adapt a lot of existing privacy and consumer protection law, but XR’s constant sensing, gaze data, and spatial mapping create edge cases regulators must address.

How can parents keep kids safe in VR?

Use what already exists, and be proactive:

- Supervised accounts: Set up platform-level supervision (e.g., Meta Quest Supervision).

- Limit data sharing: Turn off always-on voice/chat/gaze logging where possible; keep profiles private.

- Session length: Use timers and schedule breaks—VR fatigue is real for eyes and balance.

- Content filters and boundaries: Approve apps, use safe zones/guardians, and teach quick-mute/quick-exit.

- Talk early, role-play often: Practice reporting and leaving bad situations. Common Sense Media’s guide is practical: Parents’ Ultimate Guide to VR.

Are NFTs necessary for the metaverse?

Not universally. NFTs shine for verifiable ownership, provenance, and secondary markets—but they need real utility and portability. A “receipt” with no export path is just a JPEG with extra steps. Also, off-chain items can work (think Counter-Strike skins), but you’re trusting one platform’s rules, payout terms, and ban policies. Pick the trade-offs with eyes open.

What should builders do first?

- Ship privacy-by-default: Collect the minimum. Make opt-in explicit. Publish data maps. Mozilla’s VR privacy findings are a good gut-check: see the red flags.

- Support open standards: 3D assets via glTF/USD, XR via OpenXR, identity via DIDs, and on-chain assets and accounts via ERC-721/1155, ERC-4337, and ERC-6551.

- Make safety a feature, not a menu item: Onboard with consent flows, real-time blocking/reporting, and easy visibility settings. Publish transparency reports with metrics that matter (resolution speed, repeat offender reduction).

- No dark patterns: The FTC has warned about manipulative design—read their guidance: Bringing Dark Patterns to Light.

Where can I watch the talk?

On TED with a transcript. Try this search to jump right in: Micaela Mantegna on TED. It’s short, sharp, and worth your 10 minutes before you commit to any “metaverse strategy.”

Quick question before you go: want to see which platforms actually walk the talk on portability, payouts, and privacy? I’ve got a shortlist and what I’m tracking next—come with me to the next section.

What I’ll be watching next

I’m tracking three signals that will tell us whether we’re building a better metaverse—or just reskinning Web2:

- Real interoperability commitments: I want to see platforms prove “assets in, assets out” with live demos and docs—not blog posts. Ready Player Me letting one avatar show up in thousands of apps is a strong signal. Widespread use of glTF/USD for 3D and OpenXR for runtimes is another. The Metaverse Standards Forum has the right names in the room; I’m watching who ships, not who attends.

- Privacy defaults on XR devices: Eye and hand tracking is gold for engagement—and a minefield for abuse. Multiple peer‑reviewed studies have shown that even short spans of head/hand motion can uniquely identify most users, which means minimize, sandbox, and keep it on‑device by default. I’m watching whether headset makers block app access to raw biometrics, force granular permissions, and clearly label what leaves the device.

- Creator payouts and portability as standard: Epic’s Creator Economy 2.0 pays out based on engagement across Fortnite islands. Roblox continues to pay out hundreds of millions to its developer community. Great—now show that earnings and items don’t vanish at the platform border. I’m looking for export tools, portable licenses, and clear on-chain provenance for big-ticket items.
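Here’s roughly what “block raw biometrics, force granular permissions” could look like inside a runtime, as a minimal Python sketch. The scope names and the PermissionStore class are hypothetical, not any headset vendor’s API; the point is the shape: raw streams can’t be granted to apps at all, derived signals are default-deny and per-app, and revoking is exactly as easy as granting.

```python
from dataclasses import dataclass, field

# Hypothetical scopes: raw sensor streams vs. coarse signals derived on-device.
RAW_BIOMETRIC_SCOPES = {"raw_gaze", "raw_hand_joints", "raw_head_pose"}
DERIVED_SCOPES = {"gaze_target_ui", "pinch_gesture", "room_boundary"}


@dataclass
class PermissionStore:
    # app_id -> scopes the user has explicitly granted to that app
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, app_id: str, scope: str) -> None:
        if scope in RAW_BIOMETRIC_SCOPES:
            # Raw streams never reach apps, whatever the user taps.
            raise PermissionError(f"{scope}: raw biometric streams stay on-device")
        if scope not in DERIVED_SCOPES:
            raise ValueError(f"unknown scope: {scope}")
        self.grants.setdefault(app_id, set()).add(scope)

    def revoke(self, app_id: str, scope: str) -> None:
        # Withdrawing consent is one call, same as giving it.
        self.grants.get(app_id, set()).discard(scope)

    def allowed(self, app_id: str, scope: str) -> bool:
        # Default deny: only explicitly granted, per-app scopes pass.
        return scope in self.grants.get(app_id, set())


store = PermissionStore()
store.grant("chess_app", "pinch_gesture")
print(store.allowed("chess_app", "pinch_gesture"))   # True
print(store.allowed("chess_app", "gaze_target_ui"))  # False: never granted
store.revoke("chess_app", "pinch_gesture")
print(store.allowed("chess_app", "pinch_gesture"))   # False: revoked
```

Splitting raw and derived scopes is the design choice that matters: an app that only needs a pinch or a “user is looking at this button” event never sees the motion stream that makes someone identifiable.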

I’ll also keep an eye on policy with teeth. The EU’s DMA/DSA enforcement and any biometric-specific privacy rules will set the tone. If gatekeepers must allow alternative app stores, sideloading, and data portability in practice, that’s the kind of pressure that makes “open” the default.

If you’re a builder or creator: next steps

- Ship portability and publish it: Support import/export for 3D assets (glTF, USD/USDZ), identities (W3C DIDs/VCs, Sign-In with Ethereum/SIWE), and inventories (ERC‑721/1155/6551). Publish an interop matrix showing what you support and test against. If you can do a live “teleport” demo, from your world to a competitor’s, you’ve earned trust.

- Kill dark patterns: No pre-checked toggles. No “gotcha” subscriptions. No manipulative loot boxes. Use age-appropriate design and friction where safety demands it. If consent isn’t as easy to withdraw as it is to give, it isn’t consent.

- Map your data flows and cut what you don’t need: Treat data like a liability. Keep gaze, pose, and biometrics on-device; if you must process them off-device, aggregate and anonymize first. Publish a “data nutrition label” so users know what’s collected, where it lives, and for how long.

- Align incentives with outcomes that matter: Reward safety, quality, and creator earnings—make them ranking signals. Tie bonuses to time well spent, fast resolution of abuse reports, and repeat‑offender reduction, not just DAU/MAU.

- Make safety real, not reactive: Ship in-world reporting, block/mute that actually sticks across sessions, and audited moderation queues. Give creators tooling to set community rules and filters per space.

- Prove it with transparency: Quarterly reports on moderation latency, creator payouts, portability success rates, and privacy incidents. If it isn’t measured, it isn’t managed.
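The transparency numbers above are simple arithmetic once you log the right events. A minimal Python sketch, assuming a hypothetical AbuseReport record with timestamps in hours; “repeat offender rate” here means the share of reported users with more than one report in the quarter, which is one reasonable definition among several.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class AbuseReport:
    offender_id: str
    opened_h: float    # hours since start of quarter when the report was filed
    resolved_h: float  # hours since start of quarter when it was resolved


def quarterly_metrics(reports, export_attempts, export_successes):
    """Compute a few transparency-report numbers for one quarter."""
    latencies = [r.resolved_h - r.opened_h for r in reports]
    per_offender = Counter(r.offender_id for r in reports)
    repeaters = sum(1 for n in per_offender.values() if n > 1)
    return {
        "median_resolution_h": median(latencies) if latencies else None,
        "repeat_offender_rate": repeaters / len(per_offender) if per_offender else 0.0,
        "portability_success_rate": (
            export_successes / export_attempts if export_attempts else 0.0
        ),
    }


reports = [
    AbuseReport("u1", 0.0, 2.0),
    AbuseReport("u2", 1.0, 7.0),
    AbuseReport("u1", 10.0, 11.0),
    AbuseReport("u3", 5.0, 9.0),
]
print(quarterly_metrics(reports, export_attempts=50, export_successes=45))
# {'median_resolution_h': 3.0, 'repeat_offender_rate': 0.3333333333333333, 'portability_success_rate': 0.9}
```

Median beats mean for resolution time because a handful of stuck tickets would otherwise dominate the number. Tracking repeat_offender_rate quarter over quarter gives you the repeat-offender reduction figure.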

For users and investors

- Back platforms that prove openness: Look for OpenXR/WebXR support, standard 3D formats, and working export buttons. If assets can’t leave, assume they never really belong to you.

- Make privacy a buying decision: Prefer headsets and apps that process sensitive signals on-device and default to off for biometrics. Granular permission prompts are a good sign; vague “improve the experience” toggles are not.

- Check the money flow: Read payout terms, revenue shares, and clawbacks. Favor platforms that show audited numbers, not just screenshots. Bonus points for portable licensing so creators aren’t trapped.

- Watch for wallet neutrality: Can you use a third‑party wallet and separate identities per world? If a platform demands one account for everything, that’s a red flag for lock‑in and tracking.

- Trust, but verify portability: Test an export before you invest heavy time or money. Move an avatar, a wearable, or a credential to another app. If it breaks on day one, it’ll break when it matters.
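For the “test an export” step, a quick first pass on a .glb file (binary glTF 2.0) is to check its 12-byte header and first chunk against the glTF 2.0 spec: little-endian magic 0x46546C67 (“glTF”), version 2, a declared total length matching the file size, and a JSON chunk first. This sketch only checks structure; it doesn’t validate the JSON or the meshes (Khronos’s glTF-Validator does a full check), and make_minimal_glb is a hypothetical helper for the demo.

```python
import struct

GLB_MAGIC = 0x46546C67   # bytes "glTF" read as a little-endian uint32
JSON_CHUNK = 0x4E4F534A  # bytes "JSON" read as a little-endian uint32


def check_glb(data: bytes) -> list:
    """Structural first pass on a GLB file; an empty list means nothing obvious is wrong."""
    if len(data) < 20:
        return ["too short for a GLB header plus first chunk header"]
    problems = []
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        problems.append("missing 'glTF' magic")
    if version != 2:
        problems.append(f"GLB version {version}, expected 2")
    if length != len(data):
        problems.append(f"header says {length} bytes, file has {len(data)}")
    chunk_len, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != JSON_CHUNK:
        problems.append("first chunk is not JSON")
    if 20 + chunk_len > len(data):
        problems.append("JSON chunk runs past end of file")
    return problems


def make_minimal_glb() -> bytes:
    """Hypothetical helper: build the smallest GLB the checks above accept."""
    body = b'{"asset":{"version":"2.0"}}'
    body += b" " * (-len(body) % 4)  # JSON chunk is padded to 4 bytes with spaces
    chunk = struct.pack("<II", len(body), JSON_CHUNK) + body
    return struct.pack("<III", GLB_MAGIC, 2, 12 + len(chunk)) + chunk


glb = make_minimal_glb()
print(check_glb(glb))       # []
print(check_glb(glb[:-4]))  # truncated export: length problems reported
```

A truncated or mislabeled export is exactly the kind of day-one break worth catching before you sink money into an asset.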

TL;DR: The path I’m betting on

If I can’t take it with me, I don’t buy it.

The metaverse doesn’t have to become the internet’s bad sequel. If we keep pushing for open standards, strict privacy defaults, and creator economics that don’t collapse at the border, we won’t be stuck patching a broken system later.

Let’s reward teams that prove interoperability in public, treat biometric data like radioactive waste, and share real safety metrics—not vibes. That’s the version worth our time—and our wallets.